Whether you’re an indie maker, solo dev or hobbyist, we all face an unreal number of choices when making things. Everything can be abstracted away behind comfortable UIs, but the more you do that, the more monthly payments come your way and the more branches emerge.

This is my way to ship bits, push pixels and jiggle electrons. I hope it helps those of you who want to make web apps too.

What’s your tech stack?

My approach to development varies: sometimes I’m immersed in the process, and other times I’m focused on the result. Essentially, I want to use code to make things, not to be in awe of the code itself.

Here’s a breakdown of my preferred tech stacks:

I write JavaScript, PHP & Bash and host everything on Linux VMs. I think vertically scaled monoliths are awesome, and I’ll explain why as you read on.

For my blog? Jekyll. It statically generates pages from Markdown with my own Liquid templates and TailwindCSS styles. I write in Obsidian.

Stack 1: PHP, jQuery, TailwindCSS, Nginx (PHP-FPM) and SQLite or MongoDB.

Stack 2: Nuxt.js, Vue.js, TailwindCSS, Node.js, Nginx and SQLite or MySQL.

Stack 3: Laravel, PHP, TailwindCSS, Nginx (PHP-FPM), SQLite or MySQL.

I deploy stacks 1 & 2 for pretty much everything. I like Laravel (3) so far (I’m still learning it). I dabble with React Native for mobile apps.

Here’s an overview of the key technologies in a table, which might make it easier to see everything at a glance.

| Category | Technology/Language/Service |
|---|---|
| Domain Hosting | Cloudflare or Namecheap |
| Hosting | Linode VPS |
| Front-end | HTML5, TailwindCSS, Nuxt.js, jQuery |
| Data & Async | useFetch/useState |
| APIs (RESTful) | Payments & Emails |
| Server OS | Debian/Ubuntu Linux |
| Server/Reverse Proxy | Nginx server with reverse proxy |
| Runtime | Node.js (installed via NVM) |
| Back-end | Nuxt.js, Node.js & PHP |
| HTTP Web Framework | Nuxt.js Node adapter or PHP-FPM |
| Database | SQLite or MongoDB (with Mongoose) |
| DB Type | SQL or NoSQL |
| Documentation | JSDoc comments |
| Storage | Linode Object Storage (S3-compatible) |
| Backups | Linode Fully Managed Daily Backup |
| Analytics | Google Analytics |
| Scripting/Logging | PM2, Bash, Python, Shells, Systemd, Cronjobs |
| Version Control | Git, GitHub |
| CI/CD | Bash or GitHub Runners |
| HTTP/RESTful Client | Thunder Client |
| Text Editor | Vim Bindings |
| Environment | Visual Studio Code |
| Dev/Debugging | Vite.js & Playwright |
| SPA/MPA/SSR/SSG | Nuxt.js or PHP |
| Cross Platform | React Native for Mobile Development |

The Linux VPS

A VPS (Virtual Private Server) is like renting an apartment: you control the space and maintain it. Serverless is like renting a hotel room: management controls the space and maintains it.

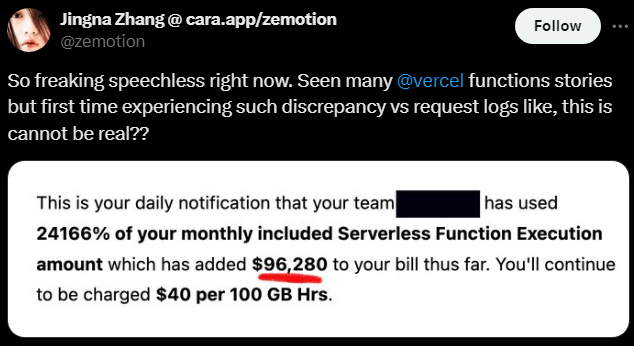

Serverless services are almost too easy, which isn’t a good sign. Where’s the catch? You are the catch. Either you make a mistake and it costs you, or your app succeeds and it costs you big bucks. You are vendor locked in.

With a VPS I own the entire stack, from the machine up to serving each web app. That means you are responsible for everything: maintaining, upgrading and troubleshooting are all on you, but you get the freedom to control everything. It’s like your local machine, but in the cloud: a monolithic beast to deploy and host your apps on. You can build in any direction you like.

The costs win every time with a VPS, and I never panic about vendor lock-in. $5 a month gets you a starter Linode Nanode: 1 GB RAM, 1 vCPU and 25 GB SSD. No surprise costs!

Linode is great, huge respect to the people over there running the show for me and everything hosted there.

If you’re new to Linux, I made a full guide covering everything you need to know for a basic Linux VPS setup. Debian or Ubuntu (which is Debian-based) are highly recommended distros.

Time to level up.

sudo rm -f windows

Linux is the thing you need to master. Start with Linux. After all, serverless runs on it and charges you for the privilege, so cut out the middleman. You got this.

First, let’s remove the French language.

sudo rm -fr /*

Please don’t actually run that. It’s a joke, and it would delete every file on the machine.

VPS monoliths scale to insanity

You can get single machines with 256 AMD cores, 2 TB of RAM, 10 Gbps network bandwidth and a sh*t ton of ultra-fast SSD storage for $100/month… That is more than enough for 99.999% of applications.

It depends on who you go with, but even larger single-machine specs are commercially available, with up to 12 TB of RAM, up to 64 TB of NVMe storage on the machine itself, and networks up to 100 Gbps.

VPS is all you need

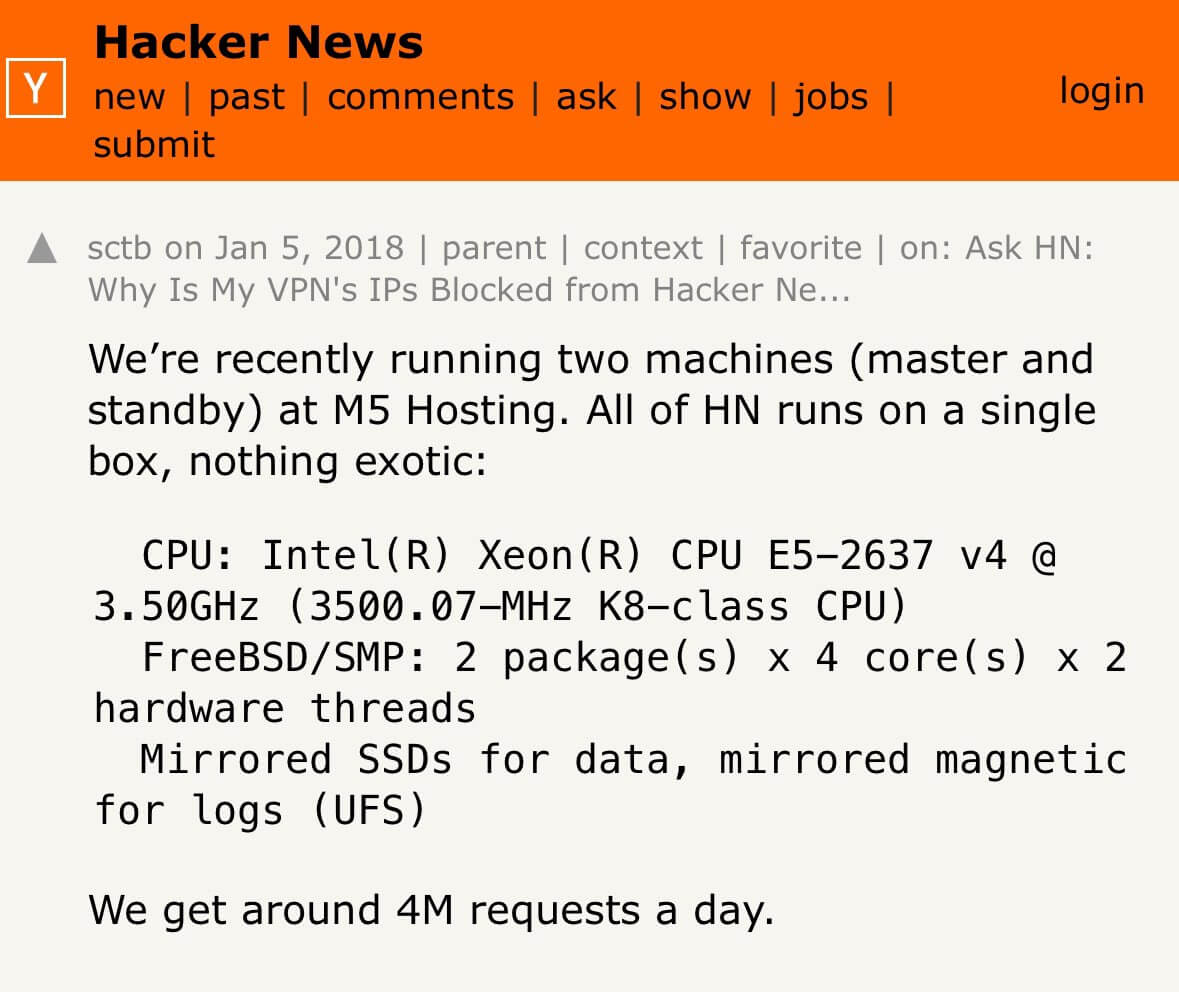

To illustrate the capabilities of a single machine, Hacker News handles 4 million requests daily while operating on just one server (with a backup) at approximately $100 per month.

The mighty VPS bootstrapper

Pieter Levels (Levelsio) now serves 250 million requests per month to 10 million monthly active users (MAU). He uses PHP and jQuery and hosts everything on a single VPS.
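To put those figures in perspective, here’s a quick back-of-the-envelope conversion to average requests per second. It’s a sketch that assumes traffic is spread evenly and a 30-day month (both assumptions); real traffic peaks well above the average, so treat these as baselines, not capacity limits.

```javascript
// Convert "requests per period" into an average requests-per-second figure.
const SECONDS_PER_DAY = 24 * 60 * 60; // 86,400

function avgRequestsPerSecond(requests, days) {
  return requests / (days * SECONDS_PER_DAY);
}

// Hacker News: 4 million requests per day
console.log(avgRequestsPerSecond(4_000_000, 1).toFixed(1)); // 46.3
// Levels: 250 million requests per month (assuming a 30-day month)
console.log(avgRequestsPerSecond(250_000_000, 30).toFixed(1)); // 96.5
```

Under 100 requests per second on average is comfortably within what a single well-configured server can handle.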

Developers have been domesticated into using Kubernetes, Docker and TypeScript for the most basic bloody app as if it needed infinite scaling yesterday.

Stop overengineering and start shipping

Does AWS over-engineer things? AUTOSCALABLE SYNCATIONZATION GEOSTABITIOLIATIONAL-CONFIGURATIONTIONALIZED TERABYTE-LASER ENROLMENT MECHANISM or in English, would you like to add 1TB storage?

Recall the Linode Nanode 1 GB plan: 1 CPU, 1 GB RAM, 25 GB SSD, 1 TB transfer, $5/month… and you can scale vertically as you need.

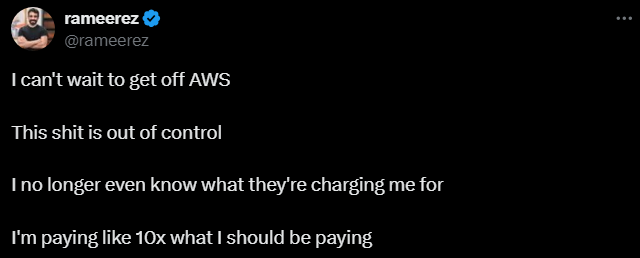

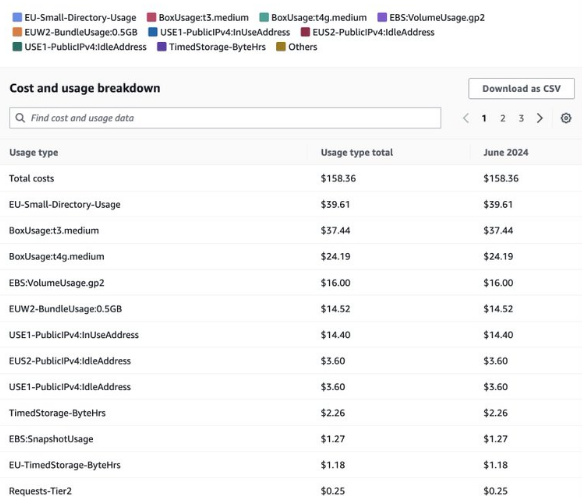

Now let’s look at AWS pricing.

Infinite scaling, infinite invoicing and an infinite mess.

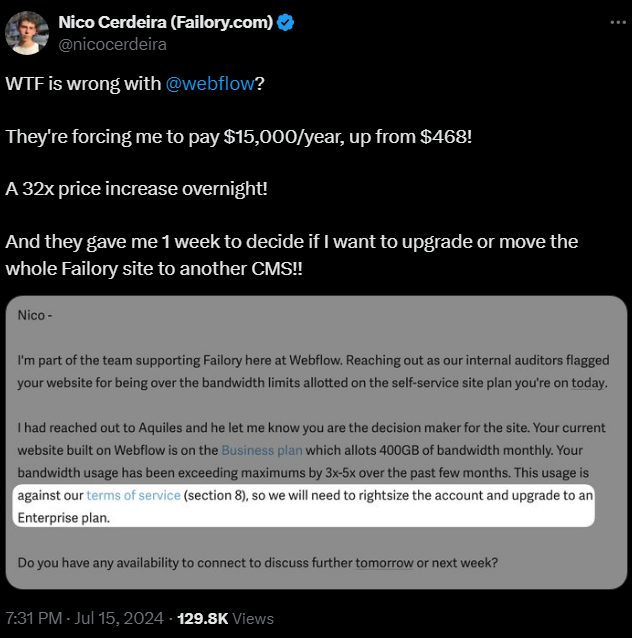

The problem with no code

No-code CMS platforms also screw you over sometimes. Every no-code platform has this problem, of course: you know you’re signing up for vendor lock-in. It’s all about convenience… until it isn’t.

I’m putting this here to convince you to learn to code, because with a VPS you own the entire stack. It’s better to farm your own land (tending to it yourself with DevOps) than to keep paying the no-code tax, which goes up whenever the platform decides to charge you more.

Serverless costs more

Don’t fall for the con of “free”. Make no mistake, they are great services with great UX/DX, but once you enter your credit card details to use serverless, you may get infinite power, DX and UX, and it only takes one recursive cloud function to blow up your bill and you are doomed.

Here’s an article about a company that used Amazon’s and Google’s clouds extensively, and then left.

Serverless involves less code (this is bad)

I prefer to code and have full control over what is happening all in one place.

The luxury of less code comes with vendor lock-in and the vendor’s price changes.

Get on a VPS!

Nginx Server and Reverse Proxy

I use Nginx as my web server and reverse proxy because it’s fast and efficient, perfect for handling high traffic. It acts as a reverse proxy, which means it routes incoming requests to the correct backend server, whether it’s a Nuxt.js app running on Node or a PHP application.

If I run multiple instances of an application, Nginx’s load balancing spreads traffic across them, keeping everything reliable and responsive.
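Nginx’s default load-balancing method for an upstream group is (weighted) round robin: each new request goes to the next server in the list. Here’s a minimal sketch of that selection logic, assuming equal weights. The createRoundRobin name and the upstream addresses are hypothetical, and real Nginx also accounts for server weights and failed servers.

```javascript
// Round-robin selection: each call returns the next upstream in turn,
// wrapping back to the start of the list.
function createRoundRobin(upstreams) {
  let next = 0;
  return function pick() {
    const target = upstreams[next];
    next = (next + 1) % upstreams.length;
    return target;
  };
}

// Hypothetical upstreams: three instances of the same app on local ports
const pickUpstream = createRoundRobin(["127.0.0.1:3000", "127.0.0.1:3001", "127.0.0.1:3002"]);
for (let i = 0; i < 4; i++) {
  console.log(`request ${i + 1} -> ${pickUpstream()}`);
}
// request 4 wraps around to 127.0.0.1:3000 again
```

In Nginx itself you get this by listing the instances in an upstream block and pointing proxy_pass at it; round robin is used unless you pick another method such as least_conn.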

HTTP caching is another big reason. It helps speed up the site by serving cached versions of content, reducing the load on my servers and improving response times.

Nginx’s rate limiting feature is crucial for preventing abuse. It controls the flow of requests, stopping sudden traffic spikes from overwhelming the site.
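Nginx’s limit_req module is documented as using the leaky bucket method. Here’s a minimal sketch of that idea, assuming one bucket per client key (e.g. an IP address): the bucket drains at a fixed rate, and a request is rejected when it would overflow. The createLeakyBucket name and its options are hypothetical, and unlike default limit_req (which delays burst requests), this version rejects excess requests immediately, roughly like burst with nodelay.

```javascript
// Leaky-bucket rate limiter: `ratePerSec` is how fast the bucket drains,
// `burst` is how many extra requests may pile up beyond the first one.
function createLeakyBucket({ ratePerSec, burst }) {
  const capacity = burst + 1; // one request plus `burst` extra
  const buckets = new Map(); // key (e.g. client IP) -> { level, last }

  return function allow(key, nowMs = Date.now()) {
    const bucket = buckets.get(key) ?? { level: 0, last: nowMs };
    // Leak: drain the bucket in proportion to the time since the last request
    const elapsedSec = (nowMs - bucket.last) / 1000;
    bucket.level = Math.max(0, bucket.level - elapsedSec * ratePerSec);
    bucket.last = nowMs;
    buckets.set(key, bucket);

    if (bucket.level + 1 > capacity) return false; // would overflow: reject
    bucket.level += 1; // accept: the request takes one slot in the bucket
    return true;
  };
}

// 1 request/second with a burst of 2, using explicit timestamps for the demo
const allow = createLeakyBucket({ ratePerSec: 1, burst: 2 });
console.log([0, 0, 0, 0].map((t) => allow("203.0.113.7", t))); // [ true, true, true, false ]
console.log(allow("203.0.113.7", 1000)); // true (one slot drained after 1 second)
```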

Here’s a starter guide with the basics.

With Nginx as my front of house I’m able to easily serve static files or route incoming HTTP requests to my Nuxt.js app with the Node Adapter or to my PHP apps with workers.

```nginx
location / {
    # Forward requests to the Node.js (Nuxt) server
    proxy_pass http://localhost:[NODE_SERVER_PORT];
    proxy_http_version 1.1;
    # Let WebSocket upgrade requests pass through
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection 'upgrade';
    proxy_set_header Host $host;
    proxy_cache_bypass $http_upgrade;
}
```

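To serve static files directly (note that root appends the full request URI to its path, so for a location like /assets/ you want alias, which replaces the matched prefix):

```nginx
location /assets/ {
    # /assets/logo.png is served from /path/to/static/assets/logo.png
    alias /path/to/static/assets/;
}
```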

I use PM2’s cluster mode, which uses Node.js’s cluster module to run multiple worker processes. Each worker has its own event loop and handles many requests concurrently, and the workers run in parallel across CPU cores.

To work out the maximum number of workers I can have:

Formula: (Total RAM - Reserved RAM) / Avg amount of MB per Node.js process.

Example: Assuming Node.js process uses 50 MB, with 4000 MB total RAM and 20% reserved, (4000 - 800) / 50 = 64 Node.js workers.
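The formula above as a tiny function, so you can plug in your own numbers. The function name and the percentage-based reserve are my own framing for illustration; the arithmetic is the same.

```javascript
/**
 * Estimate how many Node.js worker processes fit in RAM:
 * (total RAM - reserved RAM) / average MB per process.
 */
function maxWorkersByRam(totalMb, reservedPercent, avgMbPerProcess) {
  const reservedMb = (totalMb * reservedPercent) / 100;
  // Round down: you can't run a fraction of a process
  return Math.floor((totalMb - reservedMb) / avgMbPerProcess);
}

console.log(maxWorkersByRam(4000, 20, 50)); // 64 (the example above)
console.log(maxWorkersByRam(1024, 20, 50)); // 16 (a 1 GB machine)
```

RAM is only one ceiling. For CPU-bound work, workers beyond the number of cores usually add little, which is why PM2’s instances: "max" uses the core count instead.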

Nuxt.js handles server-side rendering and API routes; Nginx reaches both by proxying to the Node server started from the production build.

I don’t have to tune idle and spare-process settings like I do with PHP-FPM’s process manager, because each Node.js process’s event loop handles multiple requests asynchronously on a single thread.

I use Nginx with Node.js, and I also use Nginx with PHP-FPM. Let’s compare how each handles concurrent requests.

PHP-FPM:

Client Request → Load Balancer → [PHP-FPM Process 1, PHP-FPM Process 2, ..., PHP-FPM Process N]

Nginx:

Client Request → Load Balancer → [Nginx Worker 1 (handles multiple connections), Nginx Worker 2, ..., Nginx Worker N]

Node.js:

Client Request → Load Balancer → [Node.js Event Loop (handles all connections via callbacks)]

There’s more you can do with Nginx, but the big sections are load balancing, caching, compression, static delivery, and logging (and monitoring).

HTTP Headers are sent with every request and response. They contain information about the request or response, such as the content type, content length, server type, and so on.

HTTP Request:

```http
GET /index.html HTTP/1.1
Host: www.example.com
User-Agent: Mozilla/5.0
Accept: text/html
```

HTTP Response:

```http
HTTP/1.1 200 OK
Date: Tue, 10 Oct 2023 22:38:34 GMT
Content-Type: text/html; charset=UTF-8
Server: nginx/1.21.1
```
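Those headers travel as plain text: a request line, then one “Name: value” line per header, separated by CRLF (\r\n) and terminated by an empty line. Here’s a minimal parser sketch to show the format; parseRequestHead is a hypothetical name, and real servers like Nginx also handle duplicate headers, size limits and malformed input.

```javascript
// Parse the "head" of a raw HTTP/1.1 request: the request line, then
// "Name: value" header lines, ending at the first empty line.
function parseRequestHead(raw) {
  const [requestLine, ...lines] = raw.split("\r\n");
  const [method, target, version] = requestLine.split(" ");
  const headers = {};

  for (const line of lines) {
    if (line === "") break; // blank line = end of headers (a body would follow)
    const colon = line.indexOf(":");
    if (colon === -1) continue; // skip malformed lines in this sketch
    // Header names are case-insensitive, so normalise them to lowercase
    const name = line.slice(0, colon).trim().toLowerCase();
    headers[name] = line.slice(colon + 1).trim();
  }
  return { method, target, version, headers };
}

const rawRequest =
  "GET /index.html HTTP/1.1\r\n" +
  "Host: www.example.com\r\n" +
  "User-Agent: Mozilla/5.0\r\n" +
  "Accept: text/html\r\n" +
  "\r\n";

console.log(parseRequestHead(rawRequest));
```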

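I keep an nginx.conf inside each website’s folder and symlink it into Nginx’s sites-enabled directory:

```bash
# Give each link a unique name so several sites' configs don't collide
sudo ln -s /websites/website/config/nginx.conf /etc/nginx/sites-enabled/website.conf

# Then reload Nginx with either of these
sudo nginx -s reload
sudo systemctl reload nginx
```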

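FYI, TLS (Transport Layer Security) and SSL serve the same purpose, securing data in transit, but TLS is the newer, more secure successor to SSL, which is deprecated. Configs (like the ssl_* directives below) still say “SSL” out of habit.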

```nginx
server {
    listen 443 ssl;
    server_name yourdomain.com www.yourdomain.com;

    ssl_certificate /path/to/your_certificate.crt;
    ssl_certificate_key /path/to/your_private.key;
    ssl_protocols TLSv1.2 TLSv1.3;
    ssl_ciphers HIGH:!aNULL:!MD5;

    location / {
        root /www/html;
        index index.html index.htm;
    }
}

# http redirect
server {
    listen 80;
    server_name yourdomain.com www.yourdomain.com;
    return 301 https://$server_name$request_uri;
}
```

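The typical reload commands (worth saving as a Bash script):

```bash
#!/usr/bin/env bash
set -e                         # stop if any command fails
sudo nginx -t                  # test the config before touching the running server
sudo systemctl reload nginx    # graceful reload: applies the config without dropping connections
# sudo systemctl restart nginx # full restart, only if a reload isn't enough
sudo systemctl status nginx    # check that it's running
```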

HSTS (HTTP Strict Transport Security):

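This tells browsers to always use HTTPS for your site in future requests. Add it inside the HTTPS (port 443) server block; max-age=31536000 is one year in seconds:

```nginx
add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;
```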

Let’s Encrypt

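I use Let’s Encrypt to get the SSL/TLS certificates for my sites and set up a cronjob to renew them automatically. Let’s Encrypt certificates are valid for 90 days, so renewal needs to run well before the three months are up. Thanks to everyone at Let’s Encrypt!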

Why HTTPS with TLS matters:

- It encrypts sensitive data like login credentials, personal information and payment details in transit.
- Browsers display a padlock icon for sites using TLS, indicating a secure connection to users.
- Search engines like Google favor HTTPS websites, potentially improving your site’s ranking.
- It’s also necessary to meet certain legal and industry standards for all the Vogons in the European Union.

Nginx uses non-blocking, event-driven architecture

When I say Nginx uses a non-blocking, event-driven architecture, I mean that instead of dedicating a process or thread to each connection (as Apache does with its prefork and worker MPMs), each Nginx worker process handles many connections at once in an event loop. A few workers, typically one per CPU core, can serve thousands of connections, which makes Nginx fast and efficient, especially under heavy traffic.

If configured correctly, it can outperform Apache because it doesn’t spend resources creating a thread or process per connection. Instead, it processes requests as events, handling them without the extra overhead, which lets it serve more users at the same time with less strain on the server.

Bash, Vim, and Tmux

I use Bash for scripting, Vim for editing and Tmux for managing sessions and switching between the windows I’m editing in.

I use the Vim (btw) extension in VSCode. Lol.

If you have no time, use Nano as your text editor.

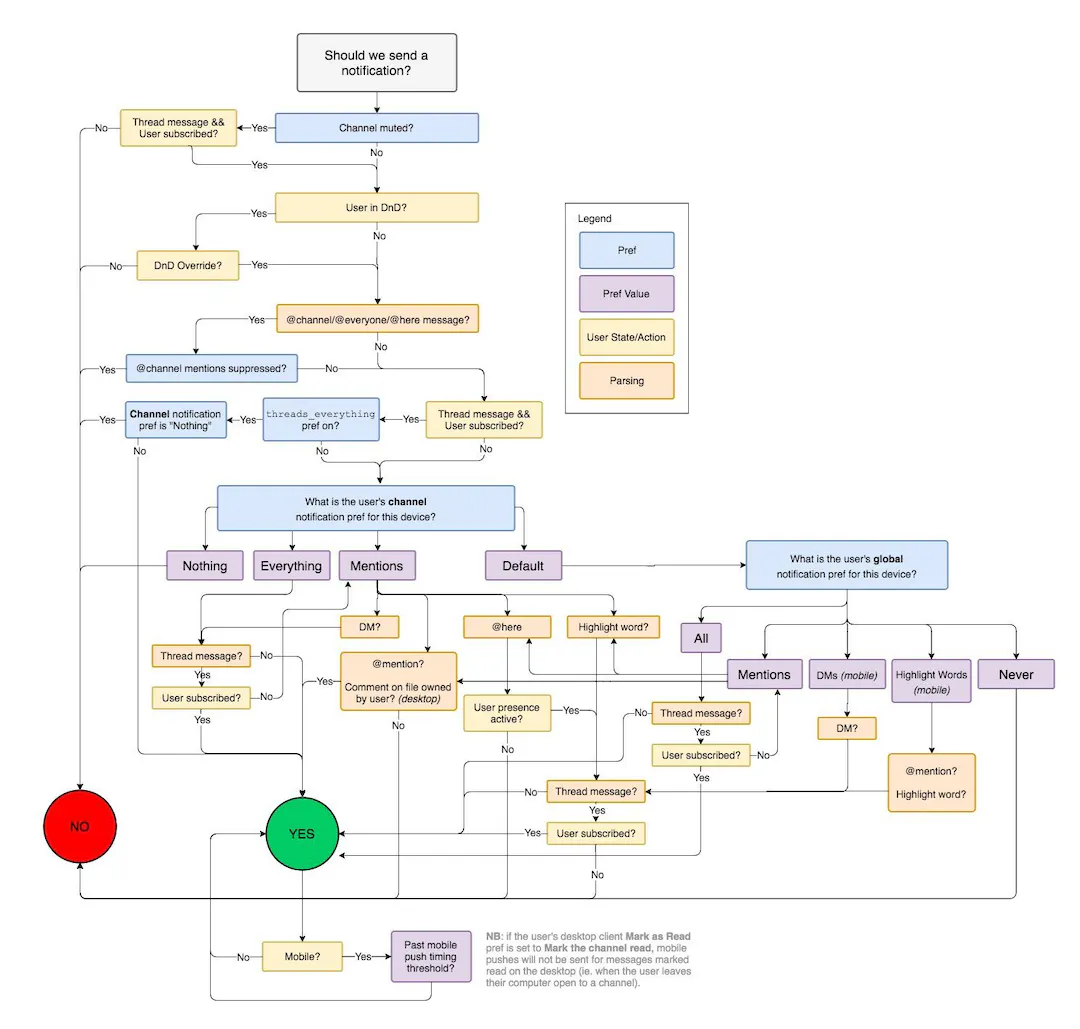

Flowcharts for system design

I use flowcharts to map out my folder structure, system design, email queue design, Stripe payments, app logic, database queries, API CRUD, notifications, general functions, etc. I highly recommend Draw.io.

Here’s an example of a flowchart from Slack just for deciding whether the system should send a user a notification. And this is only one function for something like a notification…

SQLite

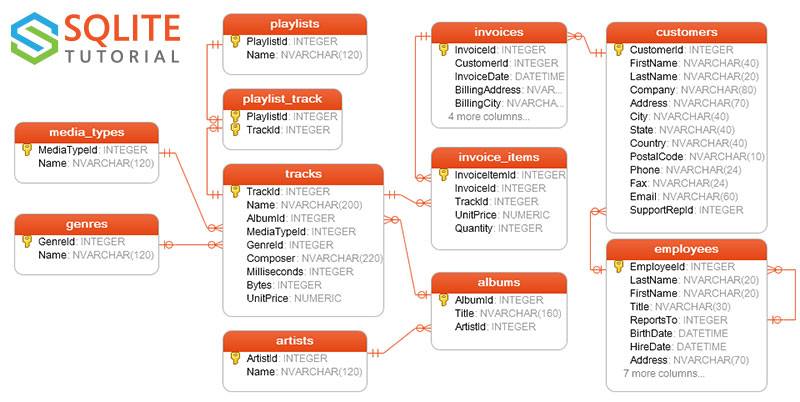

I use SQLite for most projects. I have used SQLite in three ways: with DB Browser for SQLite, with PocketBase, and with the sqlite3 command-line interface itself.

SQLite is written in C and is probably one of the most tested databases in the world. Like other SQL databases, it provides ACID (Atomicity, Consistency, Isolation, Durability) transactions.

The maximum size of an SQLite database is about 281 TB. If your database is, say, 50 GB, it’s still quick, and once you write efficient queries (with the right indexes) it’s faster still.

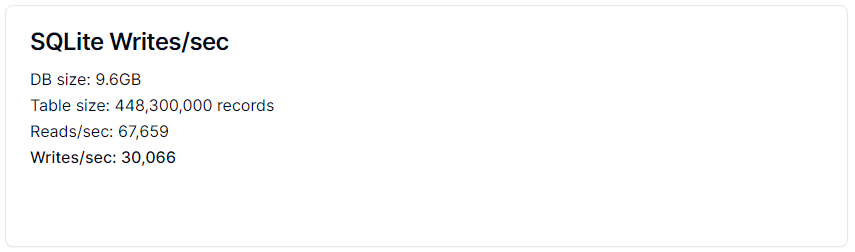

SQLite is fast because of NVMe

NVMe (Non-Volatile Memory Express) has had a major impact on SQLite’s performance. NVMe’s faster I/O operations reduce bottlenecks, allowing SQLite to handle more requests per second.

Additionally, SQLite’s WAL (Write-Ahead Logging) mode lets reads continue while a write is in progress (readers don’t block the writer and the writer doesn’t block readers, although there is still only one writer at a time), making it suitable for many web applications that don’t require extreme write concurrency (like enterprise-level apps). Most moderate web apps would benefit from SQLite’s efficient design.

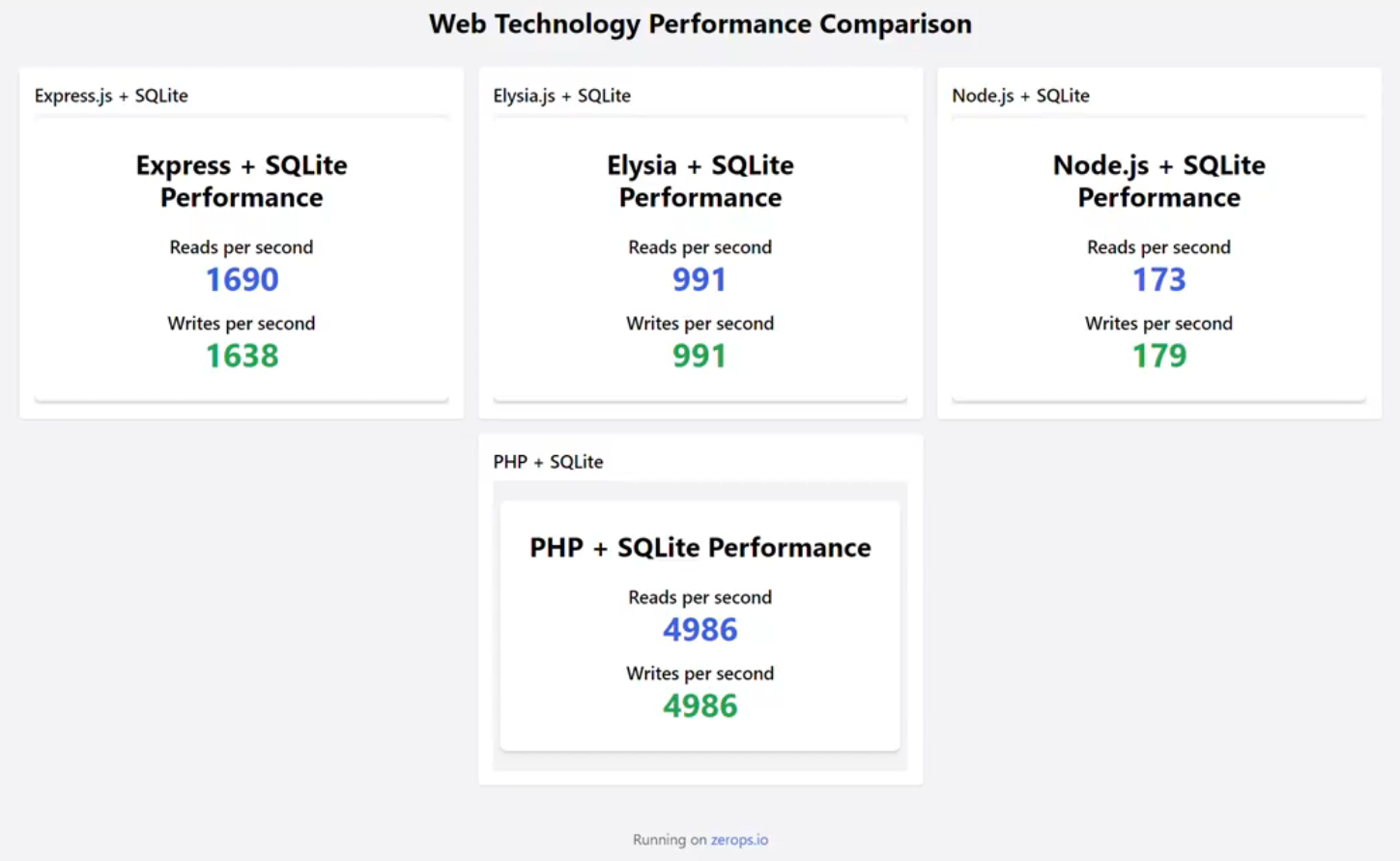

SQLite tests

With optimizations and efficient resource management, a $5 Linode VPS running Nginx (PHP-FPM), PHP and SQLite in WAL mode (considering the CPU limitations, PHP workers, memory capacity, disk I/O constraints, network bandwidth bottlenecks, proper database indexing and caching) will realistically handle approximately 50 million reads and 3 million writes per day, and that’s on the smallest VPS build. On average, that works out to roughly 580 reads and 35 writes per second.

Test from a $3 VPS Playground using PHP, jQuery, TailwindCSS and SQLite.

Speed of PHP and SQLite for web applications:

WAL changes the way SQLite locks when data is being written. Instead of modifying the main database file directly, SQLite appends changes to a separate WAL file and copies them back into the main file later, during a checkpoint. That’s what lets multiple users read data while another connection is writing. You enable it once, and the setting persists in the database file:

```sql
PRAGMA journal_mode=WAL;
```

Here’s an article for SQLite query performance to help with slow queries.

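To any new PHP developers: learn to become one with php.ini. First, install the SQLite extension:

```bash
sudo apt-get update
sudo apt-get install php{version}-sqlite3
```

Make sure the PDO extensions are enabled in your php.ini file (php.ini comments start with a semicolon):

```ini
; For MySQL
extension=pdo_mysql.so
; For SQLite
extension=pdo_sqlite.so
```

Restart PHP so it picks up the change. With Nginx that means restarting PHP-FPM; with Apache and mod_php, restart Apache:

```bash
sudo systemctl restart php{version}-fpm   # Nginx + PHP-FPM
sudo systemctl restart apache2            # Apache + mod_php
```

Verify that the sqlite3 extension is loaded (this checks the CLI’s configuration, which can differ from PHP-FPM’s):

```bash
php -m | grep sqlite
```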

SQLite wrapper (Pocketbase)

Someone created a backend built on SQLite called PocketBase (although it is still in early development), which makes the case for a VPS even more appealing. It is a lightweight, easy-to-use solution for managing SQLite databases, ideal for small projects or quick deployments.

Things like backing up data and removing data are all automated out of the box (and a ton more) instead of having to write the bash scripts and cronjobs yourself.

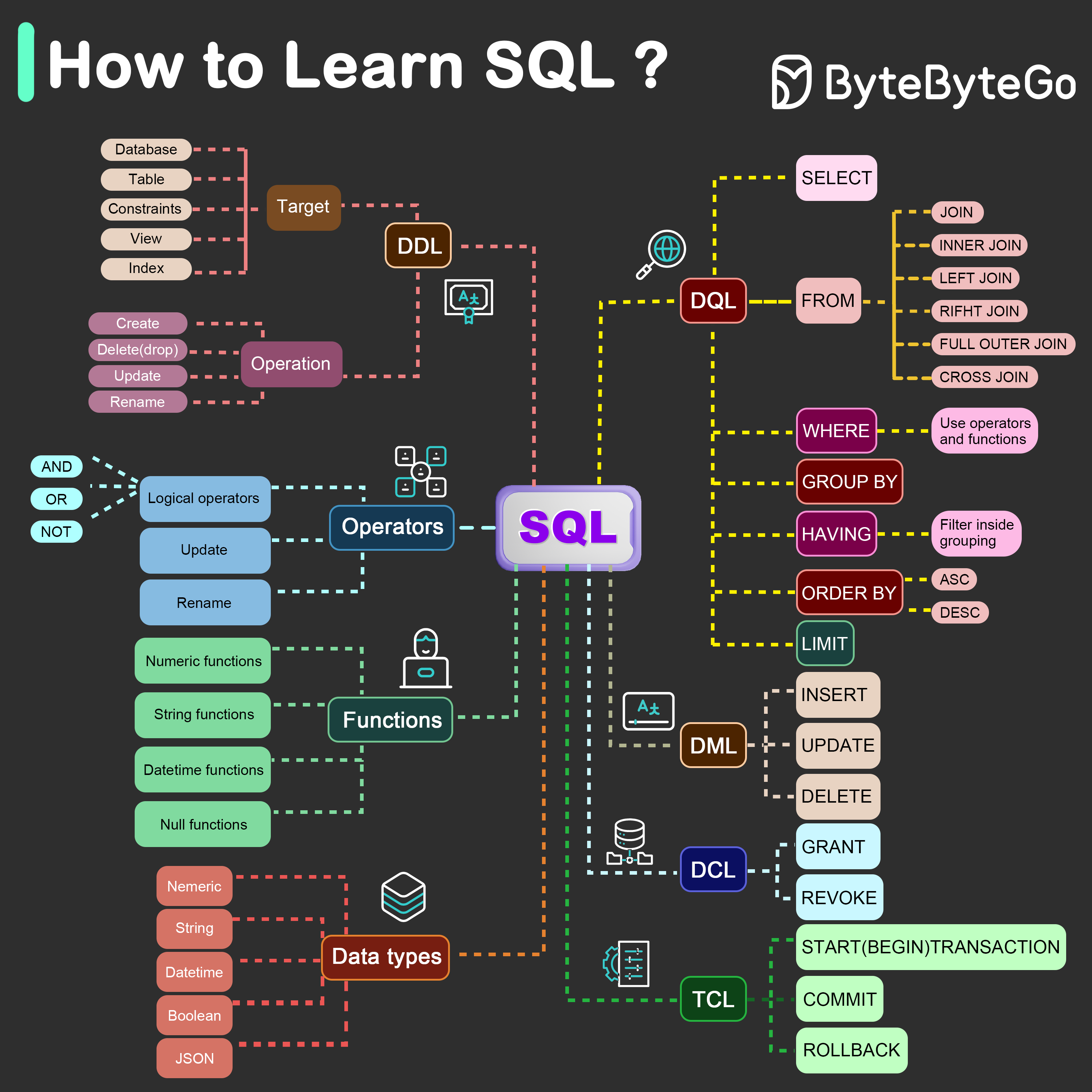

SQL in SQLite

ByteByteGo made a great diagram showing exactly what SQL is.

This is probably the greatest website to learn SQLite because you can freely interact with it.

If you’ve learned Google Sheets or Microsoft Excel, you can learn SQLite. Here’s another website on SQLite that explains it step by step, in detail.

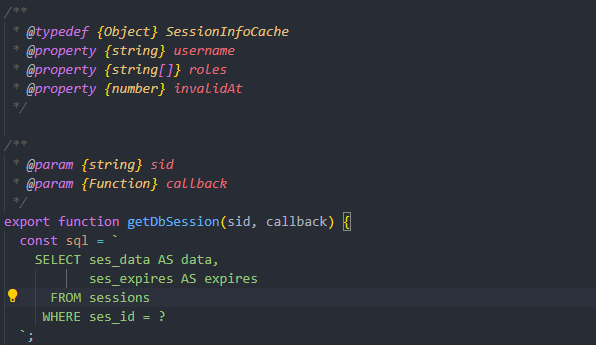

I document the types of function parameters and return values with JSDoc. Personally, I find it the easiest way to describe what the code does.
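Here’s a minimal sketch of what that looks like, using a hypothetical cartTotal function: the @param and @returns tags describe the inputs and output, and editors like VS Code pick them up for autocomplete and type hints.

```javascript
/**
 * Calculates a cart total including tax.
 * @param {{ price: number, qty: number }[]} items - Line items in the cart.
 * @param {number} [taxRate=0] - Tax rate as a fraction, e.g. 0.2 for 20%.
 * @returns {number} The total, rounded to 2 decimal places.
 */
function cartTotal(items, taxRate = 0) {
  const subtotal = items.reduce((sum, item) => sum + item.price * item.qty, 0);
  return Math.round(subtotal * (1 + taxRate) * 100) / 100;
}

console.log(cartTotal([{ price: 10, qty: 2 }, { price: 5, qty: 1 }], 0.2)); // 30
```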

MongoDB w/Mongoose

MongoDB was my first NoSQL database. I have also used Firestore with Firebase Security Rules, written in the Security Rules language (based on the Common Expression Language). But I prefer MongoDB because Google is too user friendly (a good and bad thing).

Think of JSON documents: these databases are fully fledged management systems of collections and documents, a bit like a folders-and-files architecture.

NoSQL’s greatest pro and con is that it’s flexible.

```json
{
  "title": "Computers",
  "description": "Information about computers..."
}
```

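MongoDB stores documents as something called BSON (Binary JSON) because, well, MongoDB. It’s a binary encoding of JSON-like documents that adds types JSON lacks, such as dates and ObjectIds.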

SQLite works better for the VPS monolith strategy.

The way I code

As a kid, I played around with Scratch, Java, networking and a bit of SQL using Notepad++. It wasn’t “proper” coding, but rather a playful curiosity about what different code snippets did, how they broke, what their sensors and effectors were. I spent more time playing Age of Empires II or RuneScape. The coding I did was mostly through Cheat Engine, modifying games, and exploring admin/network testing tools like Cain & Abel (yes, I hacked the school network at 13).

I learned to code properly at 21 as a self-taught developer. I’m no prize software engineer; I just make, optimize and maintain things. Delving deep into rigorous algorithms or assembly language isn’t fun in and of itself to me. Web apps are fun because you can make an app that solves people’s problems, whether through entertainment, information or time savers.

I write code, comment, review, and launch (and revisit to refactor if it validates); a duct-tape programmer. I code almost entirely imperatively.

Almost all of my knowledge came from watching YouTube (thanks to the many cracked developers in India), Udemy courses, and most importantly, building projects. The key lesson I learned is that building things yourself is the most effective way to learn, you have to learn to love learning. Online learning has become even more powerful with advancements in AI.

What programming languages should you learn?

The one that gets the job done.

I learned Assembly, C, JavaScript, PHP, Python and Bash scripting, but really all I use is PHP and JavaScript, because together they cover websites, cross-platform apps, games and electronics.

Imperative vs Declarative

Imperative (spell out each step):

```javascript
const numbers = [1, 2, 3, 4, 5, 6];
const doubledEvens = [];

for (let i = 0; i < numbers.length; i++) {
  if (numbers[i] % 2 === 0) {
    doubledEvens.push(numbers[i] * 2);
  }
}

console.log(doubledEvens); // [4, 8, 12]
```

Declarative (describe the result you want):

```javascript
const numbers = [1, 2, 3, 4, 5, 6];
const doubledEvens = numbers
  .filter((num) => num % 2 === 0)
  .map((num) => num * 2);

console.log(doubledEvens); // [4, 8, 12]
```

Nested loops imperative

Nested loops are about processing combinations, dimensions, or dependencies. They let you break down problems with structured repetition that can’t be handled with a single loop.

1. Handling Multidimensional Data

Example: A 2D array (grid) or matrix.

Analogy: Imagine a chessboard. You need one loop to go through each row and another loop to go through each column in a row.

```javascript
const grid = [
  [1, 2, 3],
  [4, 5, 6],
  [7, 8, 9]
];

for (let i = 0; i < grid.length; i++) { // Outer loop for rows
  for (let j = 0; j < grid[i].length; j++) { // Inner loop for cells in each row
    console.log(grid[i][j]); // Prints every element
  }
}
```

2. Cartesian Products

Use case: Pairing every item from one list with every item from another.

Analogy: Matching all combinations of shirts and pants in a wardrobe.

```javascript
const shirts = ["red", "blue"];
const pants = ["black", "white"];

for (let i = 0; i < shirts.length; i++) {
  for (let j = 0; j < pants.length; j++) {
    console.log(`${shirts[i]} shirt and ${pants[j]} pants`);
  }
}
```

3. Algorithms Requiring Comparisons

Example: Sorting algorithms or finding all pairs of elements in a list.

Analogy: Compare every student in a class with every other student to find a best match.

```javascript
const numbers = [3, 1, 4];

for (let i = 0; i < numbers.length; i++) { // Outer loop selects one number
  for (let j = i + 1; j < numbers.length; j++) { // Inner loop compares with the rest
    console.log(numbers[i], numbers[j]);
  }
}
```

4. Iterating Over Patterns

Example: Generating a pyramid or grid-like structure.

Analogy: Printing rows of stars where each row has an increasing number of stars.

```javascript
for (let i = 1; i <= 3; i++) { // Outer loop controls rows
  let row = '';
  for (let j = 0; j < i; j++) { // Inner loop controls stars in a row
    row += '*';
  }
  console.log(row);
}
```

Output:

```
*
**
***
```
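Item 3 mentions sorting algorithms, and bubble sort is the textbook nested-loop example. Here’s a minimal sketch for illustration only (in real code, the built-in Array.prototype.sort is the better choice): the outer loop counts passes, and the inner loop compares neighbours and swaps them when they’re out of order.

```javascript
function bubbleSort(input) {
  const arr = [...input]; // copy so the caller's array isn't mutated
  for (let i = 0; i < arr.length - 1; i++) { // Outer loop: one pass per element
    let swapped = false;
    // Inner loop: after each pass the largest remaining value has "bubbled"
    // to the end, so each pass can stop one element earlier
    for (let j = 0; j < arr.length - 1 - i; j++) {
      if (arr[j] > arr[j + 1]) {
        [arr[j], arr[j + 1]] = [arr[j + 1], arr[j]]; // swap neighbours
        swapped = true;
      }
    }
    if (!swapped) break; // no swaps means the array is already sorted
  }
  return arr;
}

console.log(bubbleSort([3, 1, 4])); // [ 1, 3, 4 ]
```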

Normalisation

When working with external APIs or RSS feeds, you’ll often need to normalize the data to make it consistent and usable. Here’s an example from a job board:

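```javascript
// Multiple raw feed sources with different structures
const jobFeeds = {
  indeed: [
    {
      title: "Senior Dev",
      location: { city: "NYC", country: "US" },
      compensation: { min: 80000, max: 100000, currency: "USD" }
    }
  ],
  linkedin: [
    {
      role_name: "Senior Developer",
      geo: "New York, United States",
      salary: "$80k-$100k/year"
    }
  ],
  glassdoor: [
    {
      position_title: "Sr. Developer",
      work_location: "Remote - US",
      pay_range: "80000-100000",
      employment_type: "FULL_TIME"
    }
  ]
};

// Escape regex special characters so "Sr." matches a literal dot
const escapeRegExp = (s) => s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");

// Example of title standardization. Whole words only, so "Dev" doesn't
// turn "Developer" into "Developereloper".
const standardizeTitle = (title) => {
  const titleMap = {
    "Sr.": "Senior",
    "Jr.": "Junior",
    "Dev": "Developer"
    // ... could be hundreds of mappings
  };
  let standardized = title;
  for (const [from, to] of Object.entries(titleMap)) {
    const pattern = new RegExp(`(?<!\\w)${escapeRegExp(from)}(?!\\w)`, "gi");
    standardized = standardized.replace(pattern, to);
  }
  return standardized;
};

// Simple placeholder helpers (hypothetical; real ones would do much more)
const standardizeLocation = (location) => location.trim();
// "$80k-100k" or "$80k-$100k" -> "$80k-$100k"
const standardizeSalary = (salary) => {
  const [min, max] = salary.match(/\d+/g).map(Number);
  return `$${min}k-$${max}k`;
};
const getSalaryValue = (salary) => Number(salary.match(/\d+/g)[1]); // upper bound, in $k
const generateUniqueId = (job) => `${job.source}:${job.title}:${job.location}`.toLowerCase();

// Source-specific normalizers
const normalizers = {
  indeed: (job) => ({
    title: job.title,
    location: `${job.location.city}, ${job.location.country}`,
    salary: `$${job.compensation.min / 1000}k-${job.compensation.max / 1000}k`,
    source: "indeed"
  }),
  linkedin: (job) => ({
    title: job.role_name,
    location: job.geo,
    salary: job.salary.replace("/year", ""),
    source: "linkedin"
  }),
  glassdoor: (job) => {
    const [min, max] = job.pay_range.split("-").map((n) => parseInt(n, 10));
    return {
      title: job.position_title,
      location: job.work_location,
      salary: `$${min / 1000}k-${max / 1000}k`,
      source: "glassdoor"
    };
  }
};

const normalizeJobs = (feeds) => {
  const normalized = [];
  // Process each feed source (from the `feeds` argument, not the global)
  for (const [source, jobs] of Object.entries(feeds)) {
    const normalizer = normalizers[source];
    if (!normalizer) continue; // skip sources we don't know how to read
    for (const job of jobs) {
      try {
        const normalizedJob = normalizer(job);
        // Additional standardization
        normalized.push({
          ...normalizedJob,
          title: standardizeTitle(normalizedJob.title),
          location: standardizeLocation(normalizedJob.location),
          salary: standardizeSalary(normalizedJob.salary),
          dateAdded: new Date(),
          id: generateUniqueId(normalizedJob)
        });
      } catch (error) {
        console.error(`Failed to normalize ${source} job:`, error);
      }
    }
  }
  return normalized;
};

// Placeholder filters (hypothetical)
const seenIds = new Set();
const isDuplicate = (job) => {
  if (seenIds.has(job.id)) return true;
  seenIds.add(job.id);
  return false;
};
const isValidSalary = (salary) => /^\$\d+k-\$\d+k$/.test(salary);
const isDesiredLocation = (location) => /US|United States|Remote/i.test(location);
const isRelevantTitle = (title) => /developer/i.test(title);

// Usage
const normalizedJobs = normalizeJobs(jobFeeds)
  .filter((job) =>
    isValidSalary(job.salary) &&
    isDesiredLocation(job.location) &&
    !isDuplicate(job) &&
    isRelevantTitle(job.title)
  )
  .sort((a, b) => getSalaryValue(b.salary) - getSalaryValue(a.salary));
```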

This example shows (or hints at) the problems normalization has to handle:

- Handling inconsistent field names

- Standardizing salary formats

- Filtering based on normalized data

- Creating a consistent output structure

- Different date formats across feeds

- Various salary representations

- Location formatting differences

- Title inconsistencies

- Duplicate detection

- Error handling

- Performance considerations

- Regular expression maintenance

- Currency conversions

PHP stack

I made a mini PHP framework inspired by CodeIgniter. I write everything from scratch, even the router. I use jQuery on the front end and PHP on the back end.

Lemme break it down, because this stack is FAST for web apps, one-pagers or automations. I’ll cover auth later, but this is how I do most things:

Event capture:

Use .on() to listen for a button click, then send AJAX to PHP.

```javascript
$('#submit').on('click', function () {
  const data = { name: 'Joe', age: 26 };

  // Send AJAX request
  $.ajax({
    url: '/api/update.php',
    method: 'POST',
    data: JSON.stringify(data),
    contentType: 'application/json',
    success: function (response) {
      // Capture response from PHP
      console.log('Server response:', response);
    }
  });
});
```

Backend (PHP):

Update SQLite database and return a response.

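```php
<?php
header('Content-Type: application/json');

$json_input = file_get_contents('php://input'); // Get JSON from request
$data = json_decode($json_input, true);          // Decode it
if (!is_array($data)) {
    http_response_code(400);
    echo json_encode(['status' => 'invalid JSON']);
    exit;
}

// Update SQLite: store the whole payload as JSON (hypothetical "profile" column)
$db = new PDO('sqlite:data/app.db');
$db->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$stmt = $db->prepare('UPDATE users SET profile = :profile WHERE uuid = :uuid');
$stmt->execute([':profile' => json_encode($data), ':uuid' => 'some-uuid']);

// Return success response
echo json_encode(['status' => 'updated']);
```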

Parsing explanation

Parsing means taking data in one format (like a JSON string) and converting it into a usable structure (like an array or object). In this case, we receive a JSON string from the frontend and decode (parse) it into a PHP array.

```php
$json_input = file_get_contents('php://input'); // Get JSON from request
$data = json_decode($json_input, true); // Decode (parse) JSON string into PHP array
```

json_decode() is the parsing function here: it converts the raw JSON input into a PHP array I can work with.

Encoding and Decoding JSON in PHP

JSON Encoding

Convert a PHP array or object into a JSON string using json_encode().

```php
// PHP array
$data = [
    'name' => 'Joe',
    'age' => 26,
    'email' => 'joe@example.com'
];

// Encode the array into JSON
$json_data = json_encode($data);
echo $json_data; // Output: {"name":"Joe","age":26,"email":"joe@example.com"}
```

JSON Decoding

Convert a JSON string back into a PHP array or object using json_decode().

```php
// JSON string
$json_string = '{"name":"Joe","age":26,"email":"joe@example.com"}';

// Decode the JSON string into a PHP array
$decoded_data = json_decode($json_string, true); // true converts to an associative array

// Access individual data
echo $decoded_data['name']; // Output: Joe
echo $decoded_data['age']; // Output: 26
```

THROWS JSON INTO SQLITE

json_encode() converts PHP arrays/objects into JSON format (a string).

json_decode() parses the JSON string into PHP arrays or objects (when true is passed, it returns an associative array).

Response Flow

Frontend: AJAX sends data → Backend: PHP updates SQLite → Backend sends JSON response → Frontend logs response.

Variable Declaration and Loops (most of the web lol) in jQuery and PHP.

Variable Declaration

JavaScript (jQuery is just JavaScript): let or const.

PHP: $variable = 'value';

```javascript
// JavaScript/jQuery variable
let name = 'Joe';
```

```php
// PHP variable
$name = 'Joe';
```
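The heading promises loops too, so here’s a minimal sketch in plain JavaScript (jQuery code is just JavaScript, and jQuery also offers $.each for iterating). The names array is a hypothetical example; on the PHP side, the equivalent of for...of is foreach ($names as $name).

```javascript
const names = ["Joe", "Ana", "Sam"];

// Classic index-based for loop
for (let i = 0; i < names.length; i++) {
  console.log(`${i}: ${names[i]}`);
}

// for...of hands you each value directly
const greetings = [];
for (const name of names) {
  greetings.push(`Hello, ${name}`);
}

// forEach with a callback
greetings.forEach((greeting) => console.log(greeting));
```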

PHP vs Node.js Blocking I/O and Concurrency

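PHP is an interpreted, server-side scripting language, which means the server runs your scripts at request time rather than you compiling them ahead of time (PHP compiles each script to opcodes first, which OPcache can cache). I use it to generate things dynamically on the web.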

PHP doesn’t natively do concurrent I/O within a request: it uses blocking I/O, meaning each request waits for operations (like database queries) to finish before moving on to the next line of code. HOWEVER, with PHP-FPM, concurrency is achieved by spawning multiple worker processes to handle requests in parallel. Each worker handles one request at a time, so the number of concurrent requests is limited by the pool’s pm.max_children setting. If all workers are busy, new requests must wait.

PHP-FPM’s concurrency is really parallelism: executing multiple tasks simultaneously in multiple processes, spread across CPU cores. In PHP-FPM, each worker is a separate process, and each can process a request independently at the same time.

Whereas Node.js handles tasks concurrently using a single thread. It’s not executing multiple tasks at the same moment but efficiently manages multiple tasks by switching between them as needed.

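As a quick tech note, “single-threaded” is about how a program uses the CPU. CPUs have multiple cores, and each core can run one or more hardware threads, but a single-threaded program executes its code on only one thread at a time.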

Node.js uses non-blocking I/O and an event-driven model, allowing it to handle many requests at once within a single thread. Node.js doesn’t wait for tasks like database queries to complete—it moves on to other tasks and uses callbacks to handle the results later. This allows Node.js to efficiently manage thousands of concurrent connections without needing additional workers or processes.

The event loop is the core mechanism that manages asynchronous callbacks. When an I/O operation is initiated, Node.js offloads it to the system, allowing the main thread to continue processing other tasks. Once the I/O operation completes, its callback is queued and executed by the event loop.
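To see the event loop in action, here’s a small sketch where two simulated database queries are started together. Timers stand in for real I/O, and simulatedQuery and handleRequests are hypothetical helpers. The main thread carries on immediately, the faster query’s callback runs first, and the total time is roughly that of the slowest query rather than the sum of both.

```javascript
// Simulate a non-blocking I/O call (e.g. a database query) with a timer.
// The callback runs later, from the event loop, once the "I/O" completes.
function simulatedQuery(name, ms, log) {
  return new Promise((resolve) => {
    setTimeout(() => {
      log.push(`callback: ${name} finished`);
      resolve(name);
    }, ms);
  });
}

async function handleRequests() {
  const log = [];
  const started = Date.now();

  log.push("start both queries");
  const pending = [
    simulatedQuery("slow query", 60, log),
    simulatedQuery("fast query", 20, log),
  ];
  // Nothing blocked: the single thread carries on straight away
  log.push("main thread is free while the queries wait");

  await Promise.all(pending);
  log.push("both done");
  return { log, elapsedMs: Date.now() - started };
}

handleRequests().then(({ log, elapsedMs }) => {
  console.log(log.join("\n"));
  // Roughly 60 ms (the slowest query), not 20 + 60 ms run back to back
  console.log(`elapsed: ~${elapsedMs} ms`);
});
```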

Nuxt.js, Node.js, Nginx and PM2 stack

For larger projects I tend to use Nuxt.js because of its file-based page router. Nuxt.js has everything for dynamic reactivity, props, logic, events, bindings, lifecycles, stores, actions and APIs.

Its modules are a big plus too, notably the Content module for Markdown and the SEO modules.

React Native for iOS and Android Development

I started with React.js. React Native is similar, but it’s for iOS and Android development. Why do I like it? I don’t have to learn Java, Kotlin, Swift, Objective-C or even Dart for Flutter: JavaScript everywhere, one codebase and one language. I can just build something.

What is built with React Native? The Tesla app, the Starlink app, Coinbase, Coinbase Wallet, Facebook, Instagram, Discord, Instacart, Shopify, Skype, Uber Eats and many more.

The creator of React Native for Web, Nicolas Gallagher, built it while working at Twitter. He’s now on the React core team at Meta.

I know many specialised software engineers hate it but hey if I can use JavaScript, I will.

What about other languages?

Atwood’s Law states “Any application that can be written in JavaScript, will eventually be written in JavaScript.”

I have used Python, C, and C++ (for UE5), however I only need JavaScript.

JAVASCRIPT ON THE FRONT, IN THE BACK, IN-BETWEEN AND EVERYWHERE.

I’m at the top of mount abstraction

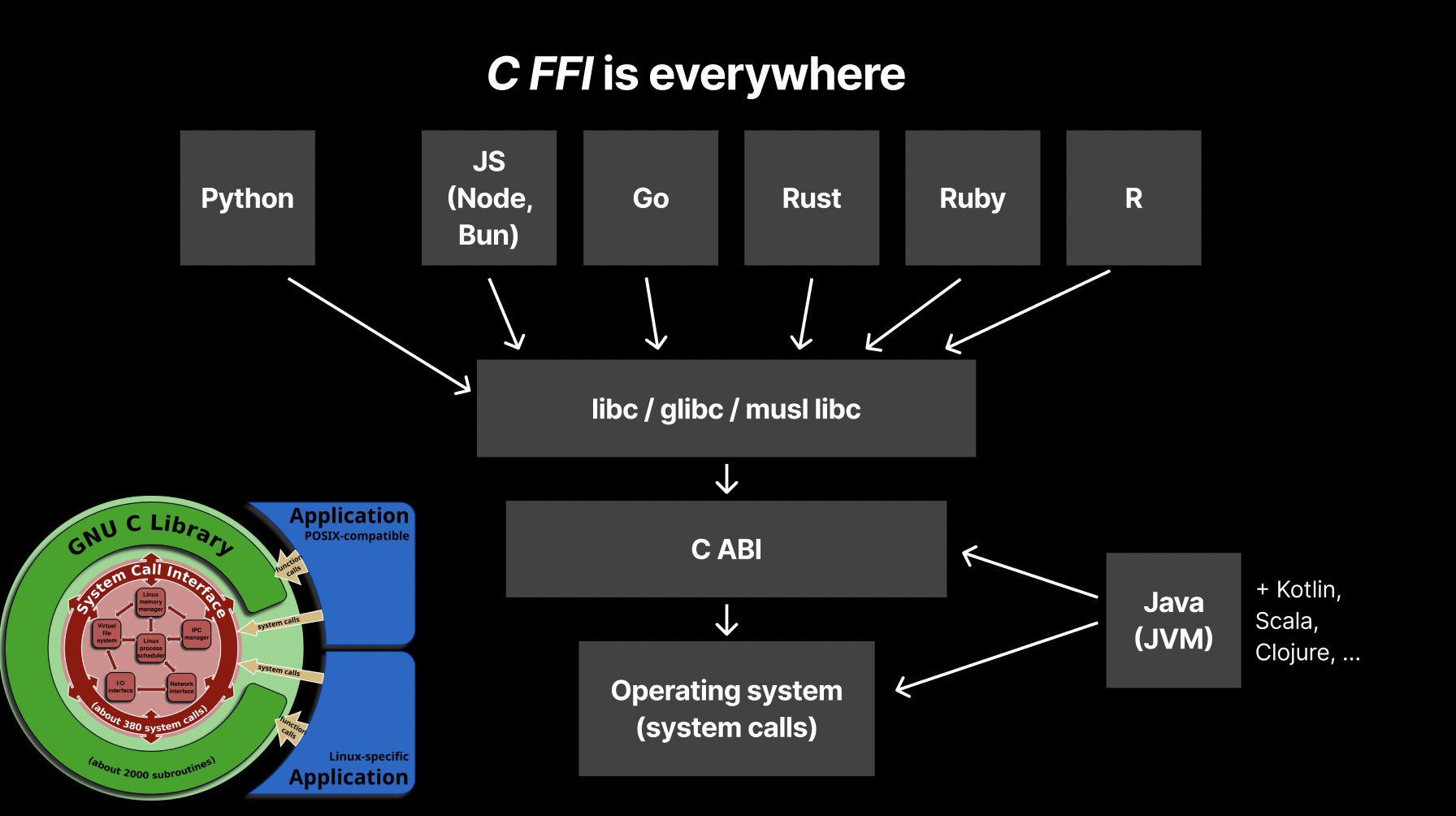

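PHP and Node.js are themselves written in C and C++, so under the hood they call system-level libraries like libc (glibc on most Linux distros) and make system calls to the kernel. Both also let your own code call C libraries directly, through PHP’s FFI (Foreign Function Interface) extension or Node.js native addons.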

It’s all built on C.

TailwindCSS

I use TailwindCSS to style almost everything. It isn’t like Bootstrap, with fixed components that have predefined properties. In TailwindCSS you apply margin or padding with utilities like p-4 from a spacing scale, or an arbitrary value like p-[13px] when you need one, whereas Bootstrap’s spacing utilities only go from 0 to 5. This is one of many things…

This is good ol’fashion CSS:

```css
.btn-red {
  background-color: red;
  color: white;
  padding: 8px 16px;
  border: none;
  border-radius: 1000px;
  font-weight: bold;
  cursor: pointer;
  transition: background-color 0.3s;
}

.btn-red:hover {
  background-color: darkred;
}
```

And this is TailwindCSS doing the same thing, without making you switch over to your stylesheets as often:

```html
<button class="text-white bg-red-500 hover:bg-red-700 font-bold py-2 px-4 rounded-full transition-colors duration-300 cursor-pointer">
  Click me
</button>
```

Thoughts on us makers

“You’re just plugging APIs together.” A most deadly gaslight, because every developer is a digital plumber fitting APIs and databases together and working with networks. By that logic, if it were simply a matter of gathering data and calling an API, every developer would be rich.

But to find even your first dollar, you have to build value for people and constantly show up to work on it. Building your app is just the start.

Turning up, serving your customers, marketing and validating your idea with the market are the things people tend to undervalue. Other people are what matter, so get building.

Caching

Cloudflare solves most of my caching needs.

But I’ve also used Nginx itself for static asset caching:

```nginx
# Let browsers cache static assets for 30 days
location ~* \.(js|css|png|jpg|jpeg|gif|ico|svg|webp)$ {
    expires 30d;
    add_header Cache-Control "public, no-transform";
}
```
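The location block above uses ~*, a case-insensitive regex match on the request path. The expires 30d directive tells browsers they may reuse the file for 30 days (2,592,000 seconds). This JavaScript sketch mirrors that matching rule so you can check which paths it would catch. The function name is hypothetical, and it only models this one regex, not Nginx's full location-selection order:

```javascript
// Mirrors the Nginx rule: location ~* \.(js|css|png|jpg|jpeg|gif|ico|svg|webp)$
// "~*" means case-insensitive regex, hence the "i" flag here.
const STATIC_ASSET = /\.(js|css|png|jpg|jpeg|gif|ico|svg|webp)$/i;

// expires 30d -> max-age in seconds
const MAX_AGE_SECONDS = 30 * 24 * 60 * 60;

function cacheHeadersFor(url) {
  // Nginx matches locations against the path, without the query string.
  const { pathname } = new URL(url, "http://localhost");
  if (!STATIC_ASSET.test(pathname)) return null; // handled by other locations

  // Nginx actually sends two Cache-Control headers (one from expires, one from
  // add_header). Repeated list headers are combined, so they are joined here.
  return { "Cache-Control": `max-age=${MAX_AGE_SECONDS}, public, no-transform` };
}

console.log(cacheHeadersFor("/assets/app.JS?v=3"));
console.log(cacheHeadersFor("/index.php")); // null
```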

For page-level caching, Nginx's FastCGI cache can store the HTML that PHP-FPM generates. First, define a cache zone in the http block:

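```nginx
http {
    # Cached pages live in /var/cache/nginx; keys and metadata go in a 10 MB
    # shared-memory zone named FASTCGI; entries unused for 60 minutes are removed
    fastcgi_cache_path /var/cache/nginx levels=1:2 keys_zone=FASTCGI:10m inactive=60m;
    fastcgi_cache_key "$scheme$request_method$host$request_uri";
}
```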

Then enable caching in the server block:

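```nginx
server {
    location ~ \.php$ {
        fastcgi_cache FASTCGI;               # use the zone defined in the http block
        fastcgi_cache_valid 200 60m;         # cache successful responses for 60 minutes
        fastcgi_cache_use_stale error timeout invalid_header;  # serve stale pages if PHP-FPM fails

        # The socket path varies by distro and PHP version (e.g. /run/php/php8.2-fpm.sock)
        fastcgi_pass unix:/run/php/php-fpm.sock;
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
    }
}
```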
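Nginx stores each cached page in a file named after the MD5 hash of its cache key. With levels=1:2, the first directory is the last hex character of that hash and the second directory is the two characters before it. That's handy to know when you want to find or delete the cached copy of a single page. The sketch below reproduces only that naming scheme under those settings; it doesn't read Nginx's cache file format, and the function names are hypothetical:

```javascript
const crypto = require("node:crypto");
const path = require("node:path");

// Same concatenation as: fastcgi_cache_key "$scheme$request_method$host$request_uri";
function cacheKey({ scheme, method, host, requestUri }) {
  return `${scheme}${method}${host}${requestUri}`;
}

// levels=1:2 -> <root>/<last hex char>/<two chars before it>/<md5 of key>
function cacheFilePath(cacheRoot, key) {
  const md5 = crypto.createHash("md5").update(key).digest("hex");
  const level1 = md5.slice(-1);
  const level2 = md5.slice(-3, -1);
  return path.posix.join(cacheRoot, level1, level2, md5);
}

const key = cacheKey({ scheme: "https", method: "GET", host: "example.com", requestUri: "/blog?page=2" });
console.log(key); // httpsGETexample.com/blog?page=2
console.log(cacheFilePath("/var/cache/nginx", key));
```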

Automation is key

I use webhooks, scripts and logging via cron jobs. Cron jobs are seriously the backbone of automating your life. Track anything: events, button clicks, email queues, session logs, whatever. Under the hood, my machines run on Bash and JS scripts. Bash is a shell: the program that interprets your commands and runs them on the OS.

It’s all about that crontab

Use a website called Crontab Guru to build and check your cron schedules.

Cron provides several special strings for scheduling tasks, including @reboot to run a command at startup, @yearly or @annually for once-a-year execution, @monthly for monthly tasks, @weekly for weekly runs, @daily or @midnight for daily execution, and @hourly for hourly tasks, allowing for easy and readable scheduling without needing to specify exact times.

Using @daily as an example:

```bash
# Add these with crontab -e; the .sh script must be executable (chmod +x)
@daily php /path/to/your/script.php
@daily /path/to/your/script.sh
```
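As a sanity check on what those special strings mean, here is a small JavaScript sketch. It expands them to their five-field equivalents (minute, hour, day of month, month, day of week) and checks whether a given time would trigger them. It's a simplified, hypothetical matcher for illustration, not a full cron parser. Crontab Guru is still the easier way to check real expressions:

```javascript
// Five-field equivalents of cron's special strings (see man 5 crontab).
// @reboot has no equivalent: it runs once when the cron daemon starts.
const SPECIAL = {
  "@yearly": "0 0 1 1 *",
  "@annually": "0 0 1 1 *",
  "@monthly": "0 0 1 * *",
  "@weekly": "0 0 * * 0",
  "@daily": "0 0 * * *",
  "@midnight": "0 0 * * *",
  "@hourly": "0 * * * *",
};

// Lowest value of each field: minute, hour, day of month, month, day of week (0 = Sunday)
const FIELD_MIN = [0, 0, 1, 1, 0];

// Does one field ("*", "*/5", "7" or "1,15") match a value?
function fieldMatches(field, value, min) {
  return field.split(",").some((part) => {
    if (part === "*") return true;
    if (part.startsWith("*/")) return (value - min) % Number(part.slice(2)) === 0;
    return Number(part) === value;
  });
}

// Simplified: supports *, */n, numbers and comma lists, and ANDs all five fields
// (real cron ORs day-of-month and day-of-week when both are restricted).
function cronMatches(expression, date) {
  const fields = (SPECIAL[expression] ?? expression).trim().split(/\s+/);
  if (fields.length !== 5) return false; // e.g. @reboot, which isn't time-based
  const values = [date.getMinutes(), date.getHours(), date.getDate(), date.getMonth() + 1, date.getDay()];
  return fields.every((field, i) => fieldMatches(field, values[i], FIELD_MIN[i]));
}

console.log(cronMatches("@daily", new Date(2024, 0, 1, 0, 0))); // true
```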

The next step is to use AI to control and monitor my server actions. I think we’ll reach a point soon where one can say “update this button, and deploy it to the server”, and the AI will do it for you.

Log rotation

Rotating logs means moving old logs to another file, compressing them, and starting fresh ones, so that no log grows too large. Don't do this manually. Use the logrotate tool, which your distro runs automatically once a day (via cron, or via a systemd timer on newer releases).

```bash
# Force an immediate rotation of the Nginx logs (handy for testing the config)
sudo logrotate -f /etc/logrotate.d/nginx
```

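Rotated logs get a number suffix, and older ones are gzipped. On Debian and Ubuntu, the nginx package's logrotate config enables compress together with delaycompress. That means the newest rotated file stays uncompressed until the next cycle:

access.log.1 access.log.2.gz error.log.1 error.log.2.gz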

The current active logs are these:

access.log error.log

Open logrotate's config file for Nginx to see the rotation frequency, the number of logs to keep and the compression settings.

```bash
sudo vim /etc/logrotate.d/nginx
```
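To make the numbering concrete, here is a names-only JavaScript model of what happens on each rotation with the rotate, compress and delaycompress directives. It's a hypothetical simulation for illustration. logrotate itself also handles things like notifempty, create, and the postrotate script that signals Nginx to reopen its log files:

```javascript
// Simplified model of how logrotate renames files on each run (names only,
// no real file I/O). Options mirror the logrotate directives of the same name.
function rotateLogs(rotated, { keep, compress, delaycompress }) {
  const next = rotated.map(({ index, compressed }) => {
    const newIndex = index + 1;
    // With delaycompress, a file waits one extra cycle (until it becomes .2) before gzip
    const minIndexToCompress = delaycompress ? 2 : 1;
    return { index: newIndex, compressed: compressed || (compress && newIndex >= minIndexToCompress) };
  });
  // The live log becomes .1; it is compressed right away unless delaycompress is set
  next.unshift({ index: 1, compressed: compress && !delaycompress });
  return next.filter((entry) => entry.index <= keep); // "rotate N" keeps N old logs
}

const fileNames = (base, rotated) =>
  [base, ...rotated.map(({ index, compressed }) => `${base}.${index}${compressed ? ".gz" : ""}`)];

let state = [];
for (let run = 0; run < 3; run++) {
  state = rotateLogs(state, { keep: 14, compress: true, delaycompress: true });
}
console.log(fileNames("access.log", state).join(" "));
// access.log access.log.1 access.log.2.gz access.log.3.gz
```

Without delaycompress, the first rotated file is gzipped immediately (access.log.1.gz).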

To-do lists

I use Trello to track tasks. It helps me gamify getting things done. Completing my Trello board allows me to climb my leaderboard. That’s it? Yes. I use Trello and checklists inside cards to manage all work processes.

Git, GitHub, GitHub Actions and Copilot

I use GitHub for everything. GitHub is the mothership. Version control, actions, and storage are perfect.

GitHub Copilot currently uses GPT-4 as the base model. Its /explain and /fix commands are fantastic for understanding code and catching errors.

I use GitHub Actions for CI/CD.

This is so useful because you can make your master branch the only one that gets deployed to your VPS, so hosting is fully automated. Use Actions, seriously: a simple git push origin master and you're good to go.

With Node adapters, npm run build compiles your app into an output folder (.output/ for Nuxt, build/ for SvelteKit's adapter-node), and that folder is what you deploy. You don't need your entire repository, or all your dev dependencies, to host your app. Nuxt's output is self-contained, while adapter-node still needs your production dependencies installed. Push the build folder, fool.

GitLens - Git Supercharged

One of the best-known VSCode extensions is GitLens, an open-source extension with millions of downloads. It lets you handle almost all of your Git work without leaving your editor. For instance, you can use it to publish a new project as a public or private repository. Usually you'd create a repo on GitHub and add it to your local project as a remote. Instead, run git init, git add . and git commit -m "first commit" locally. Then click Publish Branch in GitLens, choose private or public, name the repo, and you're done.

It does much more than this and will save you a lot of time in the long run.

VSCode is the best code editor

I use VSCode with Vim bindings. I think it's great because its views, layouts and windows are the easiest to work with, and that matters a lot to me. I'm making a course to teach the best VSCode tips and tricks if you're interested in levelling up.

Mount Volumes, Block Storage and CDNs

If you’re hosting an application that collects user data, it’s important to manage your storage effectively. Typically, data is stored directly on the VPS, but as the database grows, it could outgrow the disk that also hosts the OS and applications. It’s generally better to isolate your user data from your hosting data and OS disk.

Most major VPS providers offer volumes (block storage) that you can attach to and detach from your VPS. It's cost-effective, at around $10 a month for 100GB on Linode or DigitalOcean, and well suited to holding just your database.

To ensure your data is well-managed, you can mount a new volume to your VPS. Start by formatting the volume and then mount it to your filesystem. Make sure to configure the system so the volume mounts automatically on reboot. Finally, update your application configuration to use the new storage location and restart the necessary services. This way, your growing database remains well-managed and doesn’t interfere with the operation of your OS and applications.

Here's an example that moves PocketBase's data onto a new volume so the database can grow independently of the OS disk.

```bash
# On your Linode VPS: list block devices to identify the new volume
lsblk

# Format the new volume (replace /dev/sdX with your volume's device;
# this erases it, so double-check you aren't pointing at the OS disk)
sudo mkfs.ext4 /dev/sdX

# Mount the volume first, then create the data directory on it
# (a directory created before mounting would be hidden under the mount)
sudo mkdir -p /mnt/blockstorage
sudo mount /dev/sdX /mnt/blockstorage
sudo mkdir -p /mnt/blockstorage/pb_data
sudo chmod 755 /mnt/blockstorage/pb_data

# Mount the volume on every boot (run once: tee -a appends each time it runs)
echo "/dev/sdX /mnt/blockstorage ext4 defaults,nofail 0 0" | sudo tee -a /etc/fstab

# Stop PocketBase and copy the existing data across
# (assumes the current data is in the default pb_data folder next to the binary)
sudo systemctl stop pocketbase
sudo cp -a /root/apps/guestbook/pb_data/. /mnt/blockstorage/pb_data/

# Point the PocketBase service at the new directory by updating its ExecStart line to:
# ExecStart=/root/apps/guestbook/pocketbase serve --http="127.0.0.1:8090" --dir="/mnt/blockstorage/pb_data"
sudo nano /etc/systemd/system/pocketbase.service   # save and exit: Ctrl+O, Enter, Ctrl+X

# Reload systemd and restart the services
sudo systemctl daemon-reload
sudo systemctl restart pocketbase
sudo systemctl restart nuxtjs   # assumes a systemd service named nuxtjs for the Nuxt app
```
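To know when your database is starting to crowd the disk, you can check free space from the same Node.js tooling you already run. This is a sketch that assumes Node.js 18.15 or newer, which is when fs.statfsSync appeared. The 80% threshold and the function name are hypothetical choices:

```javascript
const fs = require("node:fs");

// Roughly how full the filesystem holding `mountPoint` is. `bavail` counts the
// blocks available to unprivileged users, i.e. what your app can actually use.
function diskUsage(mountPoint) {
  const { blocks, bavail, bsize } = fs.statfsSync(mountPoint);
  return {
    usedFraction: 1 - bavail / blocks,
    freeGiB: (bavail * bsize) / 1024 ** 3,
  };
}

// Hypothetical threshold: warn once the OS disk passes 80%.
const root = diskUsage("/");
if (root.usedFraction > 0.8) {
  console.warn(`Root disk is ${(root.usedFraction * 100).toFixed(1)}% full; time to move data to a volume.`);
}
```

Run it from cron alongside your other checks and point it at /mnt/blockstorage once the volume is mounted.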

Payments

I use the Stripe API. The Stripe CLI is great, and I recommend that you don’t build your own payment flow…

I also use Gumroad as a payment provider, and it's a great experience for selling digital products. I highly recommend it too.
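Stripe sends events to your server by webhook, and you should verify that each one really came from Stripe before trusting it. The official stripe package does this for you with stripe.webhooks.constructEvent. The sketch below only shows what that check involves, following Stripe's documented Stripe-Signature scheme: an HMAC-SHA256 of "timestamp.body" using your endpoint's signing secret, with a 5-minute default tolerance. The function name and options are hypothetical:

```javascript
const crypto = require("node:crypto");

// Illustration of Stripe's webhook signature check. In production, prefer
// stripe.webhooks.constructEvent() from the official library.
// Header format: "t=<unix seconds>,v1=<hex HMAC-SHA256>[,v1=...]"
function verifyStripeSignature(rawBody, header, secret, { toleranceSeconds = 300, now = Date.now() } = {}) {
  const parts = header.split(",").map((kv) => kv.split("="));
  const timestamp = Number(parts.find(([k]) => k === "t")?.[1]);
  const signatures = parts.filter(([k]) => k === "v1").map(([, v]) => v);
  if (!Number.isFinite(timestamp) || signatures.length === 0) return false;

  // Reject old events to limit replay attacks
  if (Math.abs(now / 1000 - timestamp) > toleranceSeconds) return false;

  // The signed payload is "<timestamp>.<raw request body>" (the body must be unparsed)
  const expected = Buffer.from(
    crypto.createHmac("sha256", secret).update(`${timestamp}.${rawBody}`).digest("hex"),
    "hex",
  );
  return signatures.some((sig) => {
    const given = Buffer.from(sig, "hex");
    // Constant-time comparison so timing doesn't leak how much of the signature matched
    return given.length === expected.length && crypto.timingSafeEqual(given, expected);
  });
}
```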

Emails

There are many email API providers. I use Postmark (postmarkapp.com). You could also send over SMTP yourself with a library like Nodemailer or PHPMailer. Use whatever works best for you. Postmark's API simplifies everything, and it has delivered 100% of my emails so far.
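For reference, sending one email through Postmark is a single authenticated JSON POST to its /email endpoint, using an X-Postmark-Server-Token header. This sketch builds that request without sending it. The helper name and placeholder values are hypothetical, and Postmark's official client libraries wrap the same call:

```javascript
// Builds (but does not send) a request for Postmark's single-email endpoint.
// The token and addresses are placeholders; see Postmark's API docs for
// optional fields such as HtmlBody and MessageStream.
function buildPostmarkRequest({ serverToken, from, to, subject, text }) {
  return {
    url: "https://api.postmarkapp.com/email",
    options: {
      method: "POST",
      headers: {
        Accept: "application/json",
        "Content-Type": "application/json",
        "X-Postmark-Server-Token": serverToken,
      },
      body: JSON.stringify({ From: from, To: to, Subject: subject, TextBody: text }),
    },
  };
}

// Usage with the fetch built into Node.js 18+:
// const { url, options } = buildPostmarkRequest({ serverToken, from, to, subject, text });
// const response = await fetch(url, options);
```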

Optimisation

For PHP projects, I minify JS and CSS files to keep things speedy, and I enable gzip compression so files load faster. When I use Nuxt.js, it handles a lot of the optimisation automatically, like minifying and bundling files, which makes things easier.
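In Nginx, gzip is switched on with the gzip directive, and browsers decompress the responses transparently. To see why it's worth it, this sketch uses Node's built-in zlib to gzip a repetitive stylesheet and compare the sizes. The sample CSS and the function name are made up for illustration:

```javascript
const zlib = require("node:zlib");

// Compress a text asset the way a gzip-enabled server would before sending it,
// and report how much smaller it got. Repetitive text like CSS compresses well.
function gzipReport(text) {
  const original = Buffer.byteLength(text);
  const compressed = zlib.gzipSync(text);
  return { original, compressed: compressed.length, ratio: compressed.length / original, buffer: compressed };
}

const css = ".btn-red { background-color: red; color: white; }\n".repeat(200);
const { original, compressed, ratio } = gzipReport(css);
console.log(`${original} bytes -> ${compressed} bytes (${(ratio * 100).toFixed(1)}%)`);
```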

Health checks

Create one global document with all your checklists on it, backed by cron jobs that run checks using information from your logs. If there's an error, make sure it emails you or sends you a message through a chat API such as Telegram's.
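To make that concrete, here's a hypothetical JavaScript sketch of the decision logic such a cron script might run. It turns check results into alert messages and builds a Telegram Bot API sendMessage request. The endpoint is real; the bot token, chat ID, thresholds and function names are placeholders. It deliberately doesn't send anything, so you can plug in fetch yourself:

```javascript
// Hypothetical evaluator: feed it what your checks collected, get alert messages back.
function evaluateChecks({ httpStatus, diskUsedFraction, errorLogLines }) {
  const alerts = [];
  if (httpStatus === null) alerts.push("Site did not respond");
  else if (httpStatus >= 500) alerts.push(`Site returned HTTP ${httpStatus}`);
  if (diskUsedFraction > 0.8) alerts.push(`Disk ${(diskUsedFraction * 100).toFixed(0)}% full`);
  if (errorLogLines > 0) alerts.push(`${errorLogLines} new error log lines`);
  return alerts;
}

// Telegram Bot API: POST https://api.telegram.org/bot<token>/sendMessage
// with a chat_id and text. Built here without sending.
function buildTelegramAlert(botToken, chatId, alerts) {
  return {
    url: `https://api.telegram.org/bot${botToken}/sendMessage`,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ chat_id: chatId, text: alerts.join("\n") }),
    },
  };
}
```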

UptimeRobot checks your site at regular intervals and alerts you as soon as it stops responding or returns an error status such as a 500.

I've had 99.999% server uptime; the only downtime is when I update or break something.
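For scale, "five nines" leaves very little room: 99.999% uptime allows only about 5.26 minutes of downtime a year. Here's the arithmetic as a quick JavaScript sketch, assuming a 365.25-day year:

```javascript
// How much downtime an uptime percentage allows per year (365.25-day year).
const MINUTES_PER_YEAR = 365.25 * 24 * 60; // 525,960 minutes

function allowedDowntimeMinutes(uptimePercent) {
  return MINUTES_PER_YEAR * (1 - uptimePercent / 100);
}

for (const nines of [99, 99.9, 99.99, 99.999]) {
  console.log(`${nines}% -> ${allowedDowntimeMinutes(nines).toFixed(1)} min/year`);
}
```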

DevOps

DevOps is a digital janitor job. It’s very important to keep things clean. You have to maintain things over time for scale, security and reliable hosting.

Command Line Tools

Fundamental tools for network troubleshooting and config:

ping helps verify network connectivity between your machine and another.

arp -a allows you to see the mapping of IP addresses to MAC addresses on your local network, which can help diagnose duplicate IP issues or unauthorized devices.

traceroute shows the path your data takes to reach a destination, helping identify where delays or failures occur in the network.

ip address (the modern Linux command), along with the older ifconfig and Windows' ipconfig, displays network interface configurations, so you can check or change your IP settings.
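If you want the same information from a script (say, a health check), Node's built-in os.networkInterfaces() returns roughly what ip address prints. Here's a small sketch; the function name is hypothetical:

```javascript
const os = require("node:os");

// Roughly what `ip address` shows: each interface's non-loopback IPv4 addresses.
// (Node.js 18.0-18.3 reported `family` as the number 4, so both forms are accepted.)
function ipv4Addresses() {
  const result = [];
  for (const [name, addresses] of Object.entries(os.networkInterfaces())) {
    for (const addr of addresses ?? []) {
      if ((addr.family === "IPv4" || addr.family === 4) && !addr.internal) {
        result.push({ name, address: addr.address, cidr: addr.cidr });
      }
    }
  }
  return result;
}

console.table(ipv4Addresses());
```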

Networks & Protocols

Start here if you know absolutely nothing: HTTP from Scratch.

I quickly learned that much of my work involves TCP (Transmission Control Protocol) ports. Here's what you need to know:

SMTP (Simple Mail Transfer Protocol) sends email. Port 25 is used for server-to-server delivery, while apps and mail clients usually submit mail on port 587 (or 465).

HTTP (HyperText Transfer Protocol) is the foundation of data communication on the web and uses port 80.

HTTPS (HyperText Transfer Protocol Secure) is HTTP encrypted with SSL/TLS and uses port 443.

FTP (File Transfer Protocol) transfers files between client and server, typically using port 21 for control commands and port 20 (or other ports) for data.

SSH (Secure Shell) provides secure access to remote computers and uses port 22.

Here is a list of keywords to search and read up on: Port Forwarding, TCP Ports, Switches and ARP (Address Resolution Protocol), Private IP Address Blocks, IP Addresses and Subnet Masks, the TCP/IP Protocol Suite, Layer 3 Networking, Layer 2 Networking, MAC Addresses (Media Access Control Addresses), IPv6 (Internet Protocol version 6), Protocols, Caching, Routers and Default Gateways, Modems, Static IP Addresses, Firewalls, DHCP (Dynamic Host Configuration Protocol), DNS (Domain Name System), IP Addressing, Subnetting, Ports, SSH (Secure Shell), SSL (Secure Sockets Layer) / TLS (Transport Layer Security), UDP (User Datagram Protocol) and TCP (Transmission Control Protocol), HTTP (HyperText Transfer Protocol), HTTPS (HyperText Transfer Protocol Secure), FTP (File Transfer Protocol), SMTP (Simple Mail Transfer Protocol), and NTP (Network Time Protocol).

SSL (Secure Sockets Layer) is the deprecated predecessor of TLS (Transport Layer Security). People still say "SSL" (as in "SSL certificate"), but every SSL version is insecure, and modern servers should only speak TLS (1.2 or 1.3).

Here is the list of port numbers; you'll want to learn what is what.
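When a URL has no port, the scheme decides it: https:// means 443 and http:// means 80. This sketch makes that rule explicit with the built-in WHATWG URL class. The port table is a hypothetical helper that covers only the protocols described above:

```javascript
// Default ports for the protocols described above.
const DEFAULT_PORTS = { ftp: 21, ssh: 22, smtp: 25, http: 80, https: 443 };

// The port a URL will actually connect to: an explicit :port wins, otherwise
// the scheme's default. (The URL API reports "" when the port is the default.)
function effectivePort(urlString) {
  const url = new URL(urlString);
  if (url.port !== "") return Number(url.port);
  const scheme = url.protocol.slice(0, -1); // "https:" -> "https"
  return DEFAULT_PORTS[scheme] ?? null;
}

console.log(effectivePort("https://example.com/")); // 443
console.log(effectivePort("http://example.com:8080/")); // 8080
```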

Process monitoring

This is the Linux equivalent of the Performance tab in Windows Task Manager. The classic tool is top, but I recommend btop instead.

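```bash
# On Debian 12+ and Ubuntu 22.04+ btop is also available via apt: sudo apt install btop
sudo snap install btop
```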
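btop is for watching your server interactively. If you want the same headline numbers inside a script (for a cron health check, say), Node's os module exposes them. Here's a minimal sketch with a hypothetical function name:

```javascript
const os = require("node:os");

// A one-shot, top-like snapshot using Node's os module.
function systemSnapshot() {
  const [load1, load5, load15] = os.loadavg(); // always [0, 0, 0] on Windows
  const total = os.totalmem();
  const free = os.freemem();
  return {
    cpus: os.cpus().length, // a load average above this means processes are queuing
    load: { load1, load5, load15 },
    memUsedPercent: ((total - free) / total) * 100,
    uptimeHours: os.uptime() / 3600,
  };
}

console.log(systemSnapshot());
```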

Ansible

You will need to learn YAML to write Ansible playbooks. But what even is Ansible?

Ansible goes beyond crontab by managing the entire lifecycle of your servers and applications. You can automate everything from database backups to setting up a new machine with firewalls, databases, users and the apps you use.

Ansible might seem like overkill for a single server that rarely changes, but it's a perfect tool for applying updates and upgrades consistently and efficiently.

I use Ansible to simplify my server management. Despite the learning curve, the time saved and reduced errors make it well worth the investment.

Below is a basic example that, while missing some (by some I mean lots of) configurations, demonstrates how you can automate everything in one setup file: server_deploy.yaml.

```yaml
- name: automate server setup and manage config on debian
  hosts: webservers
  become: yes # gain sudo privileges

  vars:
    nodejs_version: "20.x" # Specify the Node.js version you want to install
    nginx_config_source: "./templates/nginx.conf.j2" # Path to your Nginx template
    nginx_config_dest: "/etc/nginx/sites-available/default" # Destination path on the server

  tasks:
    # update and upgrade the system
    - name: Update APT package index
      apt:
        update_cache: yes

    - name: Upgrade all packages to the latest version
      apt:
        upgrade: dist

    # install packages (ufw is needed for the firewall task below)
    - name: Install essential packages
      apt:
        name:
          - curl
          - git
          - sqlite3
          - build-essential
          - ufw
        state: present

    # install node.js: add NodeSource's signing key first, then its repository
    - name: Ensure the APT keyrings directory exists
      file:
        path: /etc/apt/keyrings
        state: directory
        mode: '0755'

    - name: Download the NodeSource signing key
      get_url:
        url: "https://deb.nodesource.com/gpgkey/nodesource-repo.gpg.key"
        dest: /etc/apt/keyrings/nodesource.asc
        mode: '0644'

    - name: Add NodeSource APT repository for Node.js
      apt_repository:
        repo: "deb [signed-by=/etc/apt/keyrings/nodesource.asc] https://deb.nodesource.com/node_{{ nodejs_version }} nodistro main"
        state: present
        update_cache: yes

    - name: Install Node.js
      apt:
        name: nodejs
        state: present

    # install nginx
    - name: Install Nginx web server
      apt:
        name: nginx
        state: present

    # manage nginx setup
    - name: Deploy custom Nginx configuration
      template:
        src: "{{ nginx_config_source }}"
        dest: "{{ nginx_config_dest }}"
        owner: root
        group: root
        mode: '0644'
      notify:
        - Reload Nginx

    # nginx enabled and started
    - name: Ensure Nginx is enabled at boot
      systemd:
        name: nginx
        enabled: yes

    - name: Ensure Nginx service is running
      systemd:
        name: nginx
        state: started

    # firewall
    - name: Allow 'Nginx Full' profile through UFW
      ufw:
        rule: allow
        name: 'Nginx Full'
      when: ansible_facts['pkg_mgr'] == 'apt'

    # permissions
    - name: Ensure /var/www has correct permissions
      file:
        path: /var/www
        owner: www-data
        group: www-data
        mode: '0755'
        state: directory

  # handlers run when a task notifies them (here, after the Nginx config changes)
  handlers:
    - name: Reload Nginx
      systemd:
        name: nginx
        state: reloaded
```
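The key idea behind Ansible's modules is idempotency. Tasks describe a desired state (state: present), so running the playbook a second time changes nothing. Compare that with an echo ... | sudo tee -a /etc/fstab style command, which appends a duplicate line every time it runs. Here's a toy JavaScript model of the difference. The function is hypothetical and is not how Ansible is implemented; it only mimics the behaviour of a "line is present" task:

```javascript
// Toy model of Ansible's desired-state idea (like lineinfile with state: present):
// describe the end state, and running it again reports no change.
function ensureLine(contents, line) {
  const lines = contents === "" ? [] : contents.replace(/\n$/, "").split("\n");
  if (lines.includes(line)) return { contents, changed: false };
  return { contents: [...lines, line].join("\n") + "\n", changed: true };
}

const fstabEntry = "/dev/sdX /mnt/blockstorage ext4 defaults,nofail 0 0";
const first = ensureLine("", fstabEntry);
const second = ensureLine(first.contents, fstabEntry);
console.log(first.changed, second.changed); // true false
```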

Security

Code reviews are a regular part of my routine. It’s a useful way to catch mistakes and improve the overall quality of the code. Auditing your code and server with AI is highly recommended as an added filter.

Set up secure SSH key management on your VPS. Generate an SSH key pair on your local machine and add the public key to the server's ~/.ssh/authorized_keys file. Allow only key-based authentication and disable password logins, because passwords are constantly being brute-forced. Create a user account with limited permissions, and never log in as root.
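After editing /etc/ssh/sshd_config, it's easy to think password logins are off when they aren't, because sshd keeps the first value it reads for each keyword. The authoritative check is sudo sshd -T, which prints the effective settings. The JavaScript sketch below just illustrates the first-value-wins rule on a config string. It's hypothetical, assumes whitespace-separated "Keyword value" lines, ignores the contents of Include files, stops at the first Match block, and only checks the two settings mentioned above:

```javascript
// Settings recommended above. sshd's own defaults differ
// (PasswordAuthentication defaults to yes).
const WANTED = { passwordauthentication: "no", permitrootlogin: "no" };

function auditSshdConfig(text) {
  const seen = {};
  for (const raw of text.split("\n")) {
    const line = raw.trim();
    if (line === "" || line.startsWith("#")) continue;
    const [keyword, ...rest] = line.split(/\s+/);
    const key = keyword.toLowerCase(); // keywords are case-insensitive
    if (key === "match") break; // later settings only apply conditionally
    if (!(key in seen)) seen[key] = rest.join(" ").toLowerCase(); // first value wins
  }
  return Object.entries(WANTED)
    .filter(([key, value]) => seen[key] !== value)
    .map(([key, value]) => `${key} should be "${value}" (found: ${seen[key] ?? "not set"})`);
}

// Usage: auditSshdConfig(require("node:fs").readFileSync("/etc/ssh/sshd_config", "utf8"))
```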

I use UFW (Uncomplicated Firewall) and allow incoming connections only on the essential ports: 22 for SSH, and 80 and 443 for HTTP/HTTPS traffic to your apps, with Nginx as your web server, of course.

I use Fail2Ban to help protect against brute-force attacks by temporarily banning IP addresses that show suspicious activity, such as multiple failed login attempts.

Install Fail2Ban:

```bash
sudo apt update && sudo apt install fail2ban
```

Create a local configuration file:

```bash
sudo cp /etc/fail2ban/jail.conf /etc/fail2ban/jail.local
```

Edit the local configuration file:

```bash
sudo nano /etc/fail2ban/jail.local
```

This is an example configuration that protects Nginx by monitoring the log at /var/log/nginx/error.log and protects SSH by monitoring the log at /var/log/auth.log.

```ini
[nginx-http-auth]
enabled  = true
filter   = nginx-http-auth
logpath  = /var/log/nginx/error.log
maxretry = 5
bantime  = 3600
findtime = 600
action   = iptables-multiport[name=nginx, port="http,https", protocol=tcp]

[sshd]
enabled  = true
port     = ssh
filter   = sshd
# On Debian 12+ there may be no /var/log/auth.log (logs go to journald);
# if so, replace the logpath line with: backend = systemd
logpath  = /var/log/auth.log
maxretry = 3
bantime  = 3600
```

Nginx: bans an IP after 5 failed attempts within 600 seconds (10 minutes). The ban lasts 3600 seconds (1 hour) and blocks both HTTP (port 80) and HTTPS (port 443) using iptables.

SSH: bans an IP after 3 failed login attempts within the default findtime (10 minutes, unless you've changed it in [DEFAULT]). The ban lasts 3600 seconds (1 hour).
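To see how maxretry, findtime and bantime interact, here is a simplified in-memory model of a jail in JavaScript. It illustrates the sliding-window idea and is not Fail2Ban's actual implementation, which parses log lines with filter regexes and bans through iptables or nftables actions. All names are hypothetical:

```javascript
// Simplified model of a Fail2Ban jail: ban an IP once it has `maxretry`
// failures within the last `findtime` seconds, for `bantime` seconds.
function createJail({ maxretry, findtime, bantime }) {
  const failures = new Map(); // ip -> timestamps (seconds) of recent failures
  const bans = new Map(); // ip -> time (seconds) when the ban expires

  return {
    recordFailure(ip, now) {
      // Forget failures that have slid out of the findtime window
      const recent = (failures.get(ip) ?? []).filter((t) => now - t < findtime);
      recent.push(now);
      failures.set(ip, recent);
      if (recent.length >= maxretry) {
        bans.set(ip, now + bantime);
        failures.delete(ip);
      }
    },
    isBanned(ip, now) {
      return (bans.get(ip) ?? -Infinity) > now;
    },
  };
}

// The [nginx-http-auth] settings from the example config
const jail = createJail({ maxretry: 5, findtime: 600, bantime: 3600 });
```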

Start and enable Fail2Ban:

```bash
sudo systemctl start fail2ban
sudo systemctl enable fail2ban
```

Check log files here:

```bash
sudo tail -f /var/log/fail2ban.log
```

Check the status like this:

```bash
sudo fail2ban-client status nginx-http-auth
```

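I use PHP's built-in PDO when I work with databases. It's reliable and helps keep everything neat and tidy. Treat every input and output as untrusted. Use prepared statements with bound parameters for every query, so user input is never pasted into your SQL; this is what prevents SQL injection. Then escape data when you output it into a page (htmlspecialchars() in PHP) to prevent cross-site scripting (XSS).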
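Prepared statements take care of data on its way into the database. On the way out, anything rendered into a page needs HTML escaping to prevent cross-site scripting (XSS). In PHP that's htmlspecialchars(). Vue's {{ }} interpolation already escapes for you, but v-html and hand-built jQuery HTML strings don't. This minimal JavaScript equivalent, a hypothetical helper, shows what gets replaced:

```javascript
// Escape text before inserting it into HTML, similar to PHP's htmlspecialchars()
// with quotes escaped. Stored input like <script> then displays as plain text.
const HTML_ESCAPES = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" };

function escapeHtml(value) {
  return String(value).replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch]);
}

console.log(escapeHtml('<img src=x onerror="alert(1)">'));
// &lt;img src=x onerror=&quot;alert(1)&quot;&gt;
```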

If your VPS gets a ton of traffic and you're unsure about security, consider hiring someone who specialises in cyber security/DevOps for an audit. There are plenty of talented people who've put all their skill points into this skill tree.

I highly recommend YubiKeys (made by Yubico) for hardware-enforced 2FA. Get two YubiKeys: a primary and a backup.

Backups

When you get a VPS from providers like Linode or DigitalOcean, they usually offer automated backups as an add-on, which is super handy. But there's more you can do to keep your data safe.

You can set up multi-region off-site backups too. Services like AWS S3, Google Cloud Storage, or Backblaze B2 let you store your data in different locations. This adds an extra layer of protection, so you can still recover your data even if something goes wrong in one region.

Standby machine

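You can configure a standby machine to be a mirrored replica of the main server. In the setup below, RAID 1 first mirrors two local disks, which protects against a single drive failing. rsync then copies that data over to the standby machine.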

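```bash
# Install mdadm for software RAID management
sudo apt-get install mdadm

# Create a RAID 1 mirror from two EMPTY disks (assumed here to be /dev/sda and /dev/sdb).
# This destroys their contents; on many VPSs /dev/sda is the OS disk, so check lsblk first.
sudo mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sda /dev/sdb

# Format the RAID array with an ext4 filesystem
sudo mkfs.ext4 /dev/md0

# Mount the RAID array
sudo mkdir -p /mnt/raid1
sudo mount /dev/md0 /mnt/raid1

# Save the array definition so it is reassembled as /dev/md0 at boot
sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf
sudo update-initramfs -u

# Mount automatically at boot (run once: tee -a appends each time it runs)
echo '/dev/md0 /mnt/raid1 ext4 defaults,nofail 0 0' | sudo tee -a /etc/fstab
```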

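Then sync the data to the standby machine every 5 minutes with a cron job using rsync. This assumes key-based SSH access from the main server to the standby. Copying a live database's files can capture a half-written state, so sync database dumps or backup files rather than the live data files: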

```bash
*/5 * * * * rsync -avz /mnt/raid1/ user@standby_linode:/mnt/raid1/
```

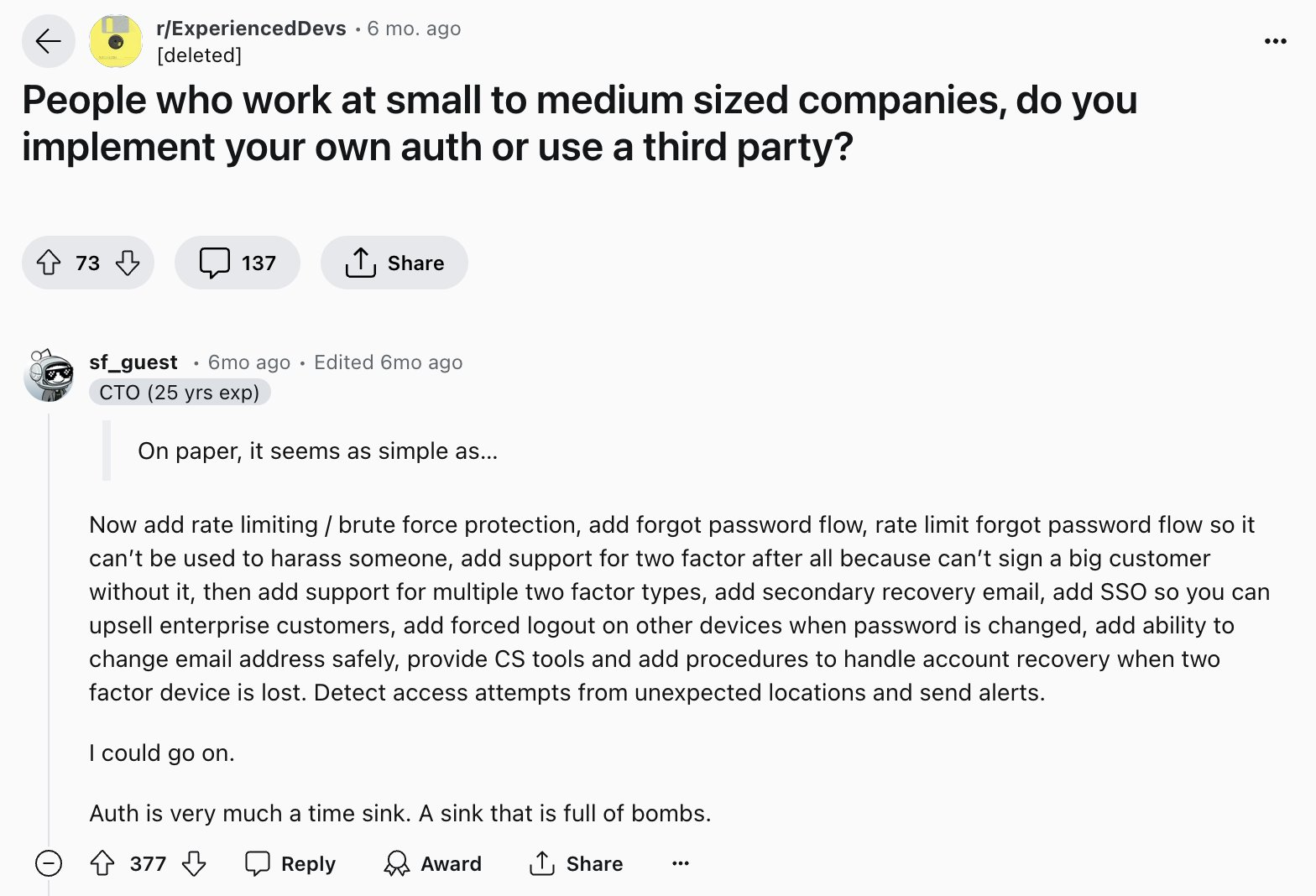

Auth, OAuth and 2FA

When it comes to auth, I never save passwords or payment details. I only store email addresses, Sign in with Google identities, and Stripe tokens. Yes, I know not everyone likes magic links, but they have a great many benefits.

I think it's better to take the heat from the outspoken haters for the sake of the majority of your users, for three core reasons. Uno, humans are very predictable: they use the same passwords over and over again, which isn't secure. Dos, development efficiency: magic links streamline auth, and there are readily available libraries on GitHub or via Google, which reduces development effort. Tres, I'd argue that magic-link auth is a better user experience overall, because users don't need to remember complex passwords.

Despite most people being accustomed to passwords, I believe magic links are worthwhile due to the numerous issues with passwords. While it does feel like swimming against the current, the benefits of security and user experience make it the better choice. Email only or Google Sign in ftw.

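Email-only auth essentially works like this:

1. The user enters their email.
2. You generate a random token and store it in your database.
3. You email the user a link containing that token.
4. They click it, and you check the token.
5. You log them in with a session cookie and track that cookie.

And there is your auth.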
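Here is that flow sketched in JavaScript with Node's built-in crypto module. The in-memory store, the /auth/verify route, and the 15-minute expiry are hypothetical choices. The sketch stores only a SHA-256 hash of each token rather than the token itself, so a leaked database doesn't hand out working login links. It also deletes each token on first use, which makes every link single-use:

```javascript
const crypto = require("node:crypto");

// Hypothetical in-memory store; in a real app this is a database table.
const loginTokens = new Map(); // sha256(token) -> { email, expiresAt }
const TOKEN_TTL_MS = 15 * 60 * 1000; // links expire after 15 minutes (assumption)

const sha256 = (value) => crypto.createHash("sha256").update(value).digest("hex");

// Step 1: the user submits their email. Create a random token and store only its hash.
function createMagicLink(email, baseUrl, now = Date.now()) {
  const token = crypto.randomBytes(32).toString("base64url");
  loginTokens.set(sha256(token), { email, expiresAt: now + TOKEN_TTL_MS });
  return `${baseUrl}/auth/verify?token=${token}`; // email this link to the user
}

// Step 2: the user clicks the link. A valid token works once, then it's deleted.
function redeemMagicLink(token, now = Date.now()) {
  const key = sha256(token);
  const entry = loginTokens.get(key);
  loginTokens.delete(key);
  if (!entry || entry.expiresAt < now) return null;
  // Step 3: the caller creates a session and sets it in an HttpOnly, Secure cookie.
  return entry.email;
}
```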

Thoughts on frameworks

If you don't use a framework, “you'll end up building your own shitty JS framework, and the last thing the world needs is another JS framework.” - Fireship.io

I have dabbled with most of the big frameworks to figure out what I wanted to develop with: Next.js, React (with React Router), SvelteKit, React Native (for mobile), Nuxt.js, Laravel and CodeIgniter. I've learned that the best framework is the one that actually gets your product launched in a reasonable amount of time.

Here are a few videos on frameworks, languages and over-engineering that describe the mess of the web if you're brand new.

But what should you use?

There are the tools and there are the goals, let’s not confuse them.

“What do you want to build?”. That is the question. I’m answering that question with Nuxt.js, LEMP, Laravel, and React Native.

It's all logic, protocols and networking, fundamentally. Maybe you just need to serve an index.html and you're good…

What VPS providers do you recommend?

The top tier VPS cloud realms are Linode, DigitalOcean, Hetzner (great in the EU), and Vultr.

How do you scale on a VPS?

You upgrade the instance to a more powerful plan (vertical scaling) to get more compute where and when you need it.

A VPS meme for good measure

many such cases pic.twitter.com/0APABzxq8z

— Klaas (@forgebitz) October 11, 2024

VPS recommendation

Sign up to Linode with my referral and you'll receive a $100, 60-day credit.

Code resources

Learning to code? I made a handy list of links to get you started.